It’s hard to believe that Stable Diffusion was initially released only a year ago in August 2022. Since then, a new ecosystem has emerged around the open source model, built by a diverse community of researchers, non-profits, corporations and individuals. Creating an enterprise solution for image generation now requires more than just picking a model version: new customization techniques unlock artists’ creativity in ways that were not possible last year.

All of this innovation around Stable Diffusion would not have been possible had the model remained proprietary. Because both the model’s source code and weights were released under a CreativeML Open RAIL++-M License, a flurry of activity emerged immediately in the community. A similar ecosystem is emerging around open source LLMs such as the Llama lineage, with orchestration tools such as LangChain and LlamaIndex, vector databases, security/safety tools such as Guardrails AI or Rebuff, and even new disciplines such as prompt engineering. We believe that together these two disparate ecosystems signal a strong future for open source models.

The new image generation ecosystem may be less well known than the LLM stack. So today we want to highlight some of the most important players in the open source image generation stack and discuss what new capabilities they add. As you will see, the center of activity is gradually moving away from the base model and into the surrounding techniques, communities, and tools.

Base models are just the beginning

If you’d like to understand the internals of how Stable Diffusion converts text into an image, we think you’d be hard pressed to find a better resource than Jay Alammar’s Illustrated Stable Diffusion. The second half of his blog post points to the importance of the transformer model used to understand the prompt and produce the token embeddings. He states, “Swapping in larger language models had more of an effect on generated image quality than larger image generation components.” In the past year, image generation researchers have already experimented with BERT, OpenAI’s ClipText (used in SDv1), Google’s T5 models and LAION’s OpenCLIP (used in SDv2). This experimentation across organizations shows that the open nature of the model invites collaboration and rapid progress on the quality of generated images.

But base models are just the beginning. Although major releases of the base model such as 1.5, 1.6, 2.0, 2.1 and SDXL capture the headlines, supporting auxiliary models is where some of the most interesting innovation is occurring.

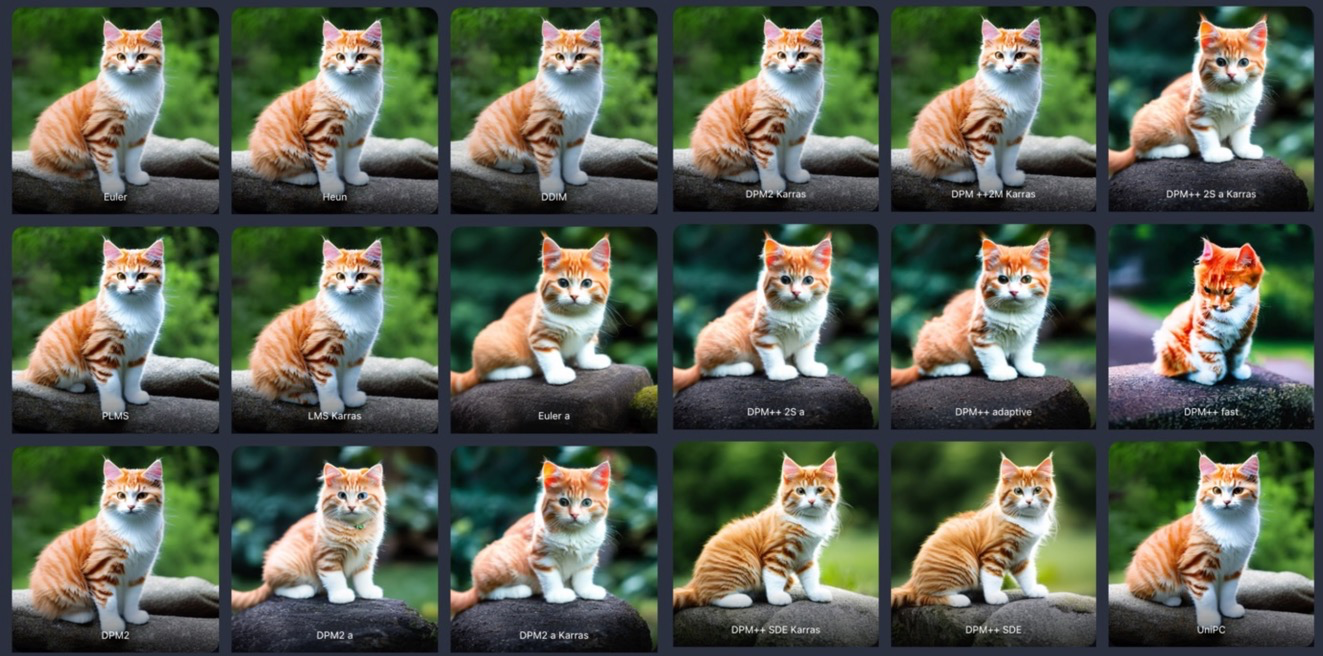

Before there were custom weight techniques to control creativity, there were schedulers to control quality and speed. Schedulers, also called samplers, are the algorithms used alongside the UNet in Stable Diffusion that drive the iterative denoising process. One of the early areas of rapid innovation around the base Stable Diffusion model last year was support for a variety of schedulers, each making different tradeoffs between inference speed and image quality.

Although there are over 20 schedulers available for Stable Diffusion, the top three in use are DPM++ 2M Karras, DPM++ SDE Karras and Euler a. Each scheduler needs a different number of steps to generate a high-quality image. For example, Euler may require 64 steps while DDIM may only require 24. Furthermore, some schedulers like Euler a are known to be better at generating images of people. Check out Scott Detweiler’s popular YouTube video comparing different samplers with different step counts.
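To make the idea of a scheduler a little more concrete: the “Karras” suffix in sampler names such as DPM++ 2M Karras refers to the noise-level spacing proposed by Karras et al. (2022). Below is a minimal sketch of that spacing in plain Python. The function name and the default values for `sigma_min`, `sigma_max` and `rho` are illustrative assumptions, not the settings of any particular Stable Diffusion release or UI.

```python
def karras_sigmas(n_steps, sigma_min=0.1, sigma_max=10.0, rho=7.0):
    """Return n_steps noise levels running from sigma_max down to sigma_min.

    Illustrative defaults only (assumed values, not taken from a real config).
    """
    if n_steps < 2:
        raise ValueError("n_steps must be at least 2")
    max_inv_rho = sigma_max ** (1.0 / rho)
    min_inv_rho = sigma_min ** (1.0 / rho)
    sigmas = []
    for i in range(n_steps):
        t = i / (n_steps - 1)  # 0.0 at the first step, 1.0 at the last
        # Interpolate linearly in sigma^(1/rho) space, then map back.
        sigmas.append((max_inv_rho + t * (min_inv_rho - max_inv_rho)) ** rho)
    return sigmas


if __name__ == "__main__":
    # Fewer steps means larger jumps between consecutive noise levels.
    for steps in (8, 24):
        levels = karras_sigmas(steps)
        print(steps, [round(s, 3) for s in levels])
```

The sampler then denoises from one noise level to the next, which is why the step count and the spacing together influence both speed and quality.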

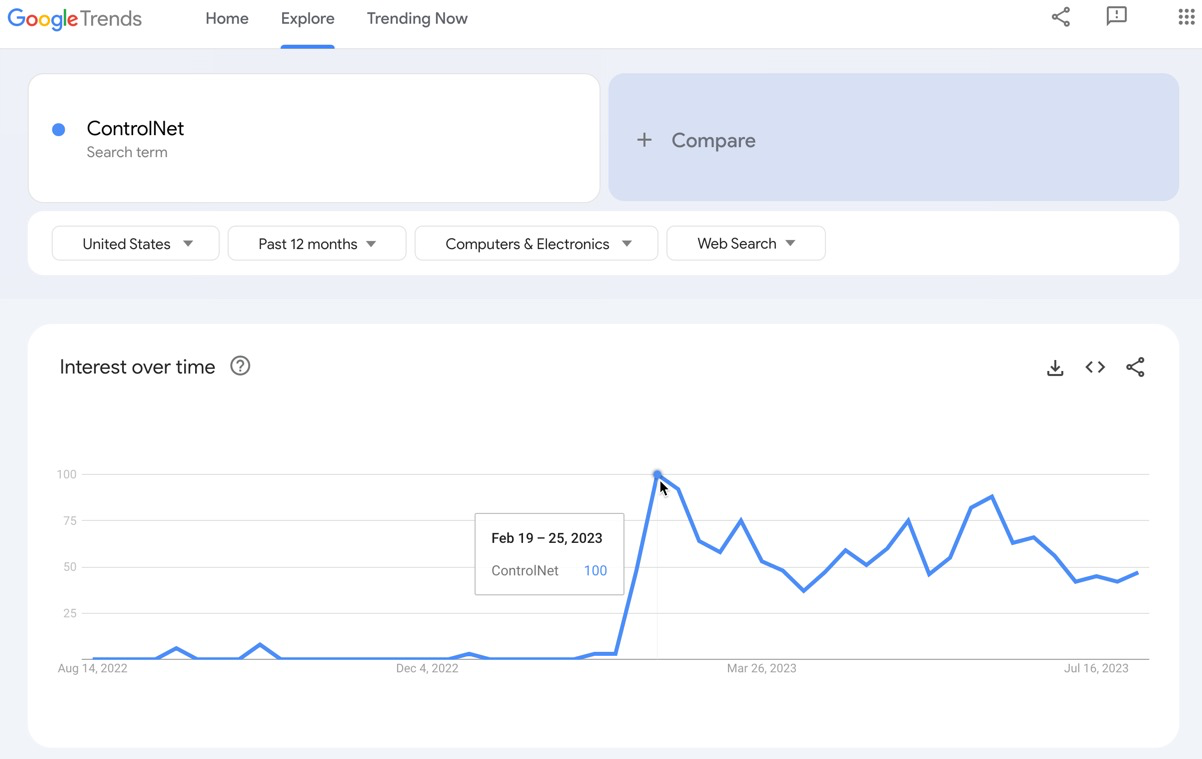

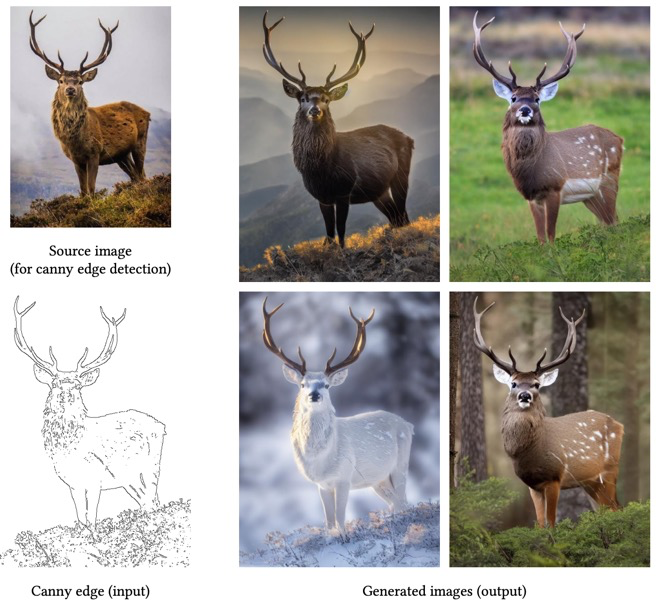

Consider ControlNet, which was released in February 2023 by two Stanford University researchers. It lets diffusion models go beyond the text prompt when generating images. With ControlNet’s Automatic1111 extension you can, for example, use edge detection to extract the outline of an object in a source image (or pose detection to extract a human pose) and pass that result along with a custom text prompt to Stable Diffusion to generate new images with that object in it. Here’s an example of extracting a deer object and embedding it into generated images:

Using ControlNet to guide Stable Diffusion, interior designers can now place different styles of furniture in an image of a living room, and we can create images of ourselves in the poses of superheroes. There are now various permutations of ControlNet:

- Controlnet-canny uses Canny edge detection (like the deer image above) to place the detected object in different settings

- Controlnet-scribble lets you turn your scribbles into detailed photorealistic images

- Controlnet-hough uses M-LSD detected edges to generate different styles or themes of the input image

- Controlnet-pose extracts the pose of the character in the input image and generates a new image with a character in the same pose
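To give a feel for what an edge-based control image is, here is a deliberately simplified sketch in plain Python. It is not the Canny algorithm (which also smooths the image, thins edges and applies hysteresis thresholding); it only thresholds the gradient magnitude of a small grayscale image. The function name, the threshold and the toy image are all assumptions made for illustration. In a real pipeline, an edge map like this would be passed to ControlNet alongside the text prompt, a step that is not shown here.

```python
def edge_map(image, threshold=0.5):
    """Return a binary edge map for a 2-D grayscale image.

    `image` is a list of rows of floats in [0, 1]. Border pixels are
    left as 0 because the central difference needs both neighbours.
    """
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = image[y][x + 1] - image[y][x - 1]  # horizontal change
            gy = image[y + 1][x] - image[y - 1][x]  # vertical change
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 1
    return edges


def toy_image(size=7, lo=2, hi=4):
    """A dark image with a bright square, standing in for a photo."""
    return [
        [1.0 if lo <= y <= hi and lo <= x <= hi else 0.0 for x in range(size)]
        for y in range(size)
    ]


if __name__ == "__main__":
    for row in edge_map(toy_image()):
        print("".join("#" if v else "." for v in row))
```

The outline of the square survives while its flat interior and the background are dropped, which is the kind of structural hint an edge-conditioned ControlNet works from.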

To learn more about ControlNet check out this comprehensive guide and then explore the ControlNet models. One of our favorites at OctoAI is the QR pattern generator to create beautiful custom QR codes.

If you’d like a brief history of the model evolutions that led to Stable Diffusion, Michał Chruściński’s image generation blog does a great job of highlighting the major breakthroughs.

Customization aligns creativity to your use case

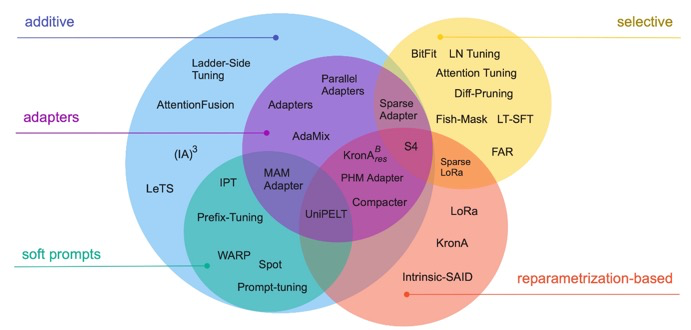

When you hear the words “fine tuning” you may immediately think about customizing LLMs. After all, fine tuning large language models is one of the most active areas of R&D in AI. This summer there has been an explosion in fine tuning techniques that keep most of the layers in the neural network frozen and only train the top few layers to minimize compute costs. There are dozens of fine tuning methods being researched, with parameter-efficient methods such as LoRA, adapters and prefix tuning generating particular buzz.

In addition to the research in the space, it seems that every LLM vendor is scrambling to bring their own flavor of LLM fine tuning directly into their platform to let their customers unlock the full potential of LLMs.

Similarly, within the image generation space, perhaps no development has been as profound as the explosion of customized Stable Diffusion models created with fine tuning techniques such as LoRA, DreamBooth and Textual Inversion. This particular innovation for image generation has occurred almost entirely in open source and only around Stable Diffusion. Consider the contrast: there is one DALL-E 2 model hosted by OpenAI, and any custom style you want to generate can only be described via the prompt. Meanwhile, there are now several websites that host customized Stable Diffusion model weights (such as checkpoints, LoRAs and textual inversions), totaling petabytes of downloaded weights each week. The open source nature of Stable Diffusion gave birth to a community of tinkerers and enthusiasts who support a kind of creativity for artists that was not possible with Midjourney or DALL-E.

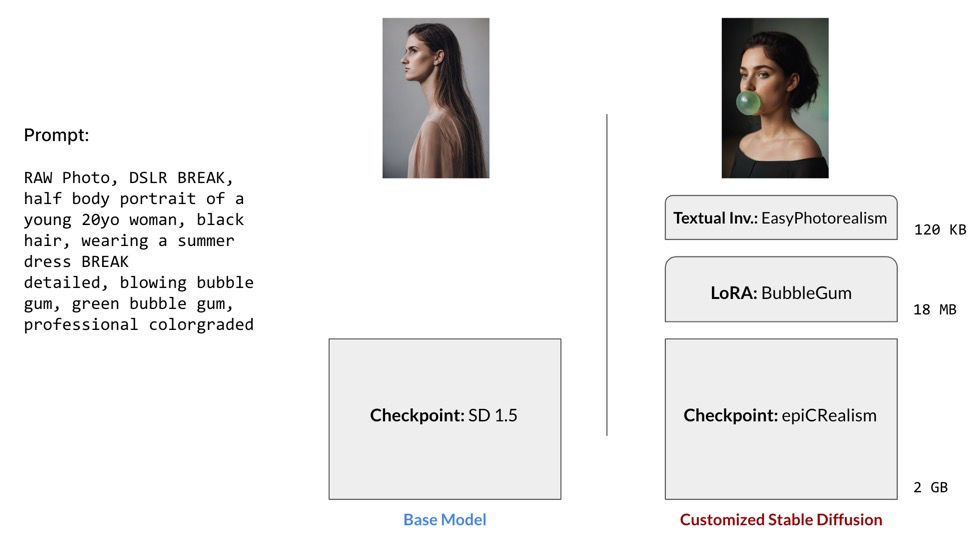

The diagram above demonstrates how customization techniques can be stacked together to generate a final image. Here we compare what the same prompt generates with a base Stable Diffusion 1.5 model against a custom checkpoint (epiCRealism), the BubbleGum LoRA and the EasyPhotorealism Textual Inversion.

Here are four of the most common Stable Diffusion customization techniques with pros and cons of each:

- Checkpoints capture a full set of the model’s weights produced by fine-tuning a base model such as v1.5 or 2.1. DreamBooth is the most common technique used for generating checkpoints for few-shot personalization. DreamBooth implants a new (identifier, subject) pair into the diffusion model’s internal dictionary that can then be used in future prompts with the fine-tuned model. Although checkpoints generate high-quality custom images, they can be expensive to train and are often several gigabytes in size.

- LoRA is an acronym for Low-Rank Adaptation, a technique originally developed for LLMs. LoRA freezes the pre-trained model’s weights and injects trainable low-rank matrices into each layer of the neural network. The output of LoRA fine-tuning is therefore a set of additional model weights rather than a full checkpoint. This makes LoRAs much faster and cheaper to train, and the resulting weights are generally 2 to 500 MB.

- Textual Inversion teaches the model new vocabulary in the text encoder’s embedding space for a specific concept from only 3 to 5 example images. The new “trigger words” are then used within the prompt to control the image generation. The concept can be a style, object, person, etc. Textual Inversions can be especially useful for training faces. These are generally in the 10 to 100 KB size range.

- HyperNetworks are a somewhat older technique from 2016 in which one network generates weights for another network. HyperNetwork files are applied on top of checkpoint models to steer the generated image towards a style or theme. These tend to be between 5 and 300 MB.
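To illustrate why LoRA weights are so much smaller than a full checkpoint, here is a minimal sketch of the low-rank idea in plain Python. The frozen weight matrix `W` is left untouched, and the trainable update is the product of two thin matrices `B` and `A`. The layer size (768 x 768), the rank (4), the `scale` parameter and all function names are assumptions chosen for illustration; they are not taken from any specific Stable Diffusion layer or training tool.

```python
def lora_param_counts(d_out, d_in, rank):
    """Trainable parameters: full weight matrix vs. the two LoRA factors."""
    full = d_out * d_in            # fine-tuning W directly
    lora = rank * (d_in + d_out)   # A is rank x d_in, B is d_out x rank
    return full, lora


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(x, W, A, B, scale=1.0):
    """Compute W x + scale * B (A x). W stays frozen; only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, update)]


if __name__ == "__main__":
    full, lora = lora_param_counts(768, 768, 4)
    print(f"full: {full} params, LoRA: {lora} params ({lora / full:.2%})")

    W = [[1.0, 2.0], [3.0, 4.0]]   # frozen base weights
    A = [[1.0, 0.0]]               # rank-1 down-projection
    B = [[0.0], [0.0]]             # zero up-projection: no change yet
    print(lora_forward([1.0, 1.0], W, A, B))
```

With `B` set to zero the layer behaves exactly like the base model, and training only has to store `A` and `B`, which is roughly where the size gap between LoRA files and full checkpoints comes from.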

Frameworks and tools such as Kohya-ss and InvokeAI make it easier for artists to generate custom Stable Diffusion weights using these techniques.

Graphical Interfaces emerge to make customization easy

Did you know that a typical final “masterpiece” image created via a generative model can require the artist to iteratively experiment with hundreds of prompts? An AI artist named Nick St Pierre was quoted in The Economist as saying: “The image I produce isn’t my work. My work is the prompt”. Today there are websites dedicated to hosting massive databases of prompts such as PromptBase and Lexica along with compiled structured files of prompts.

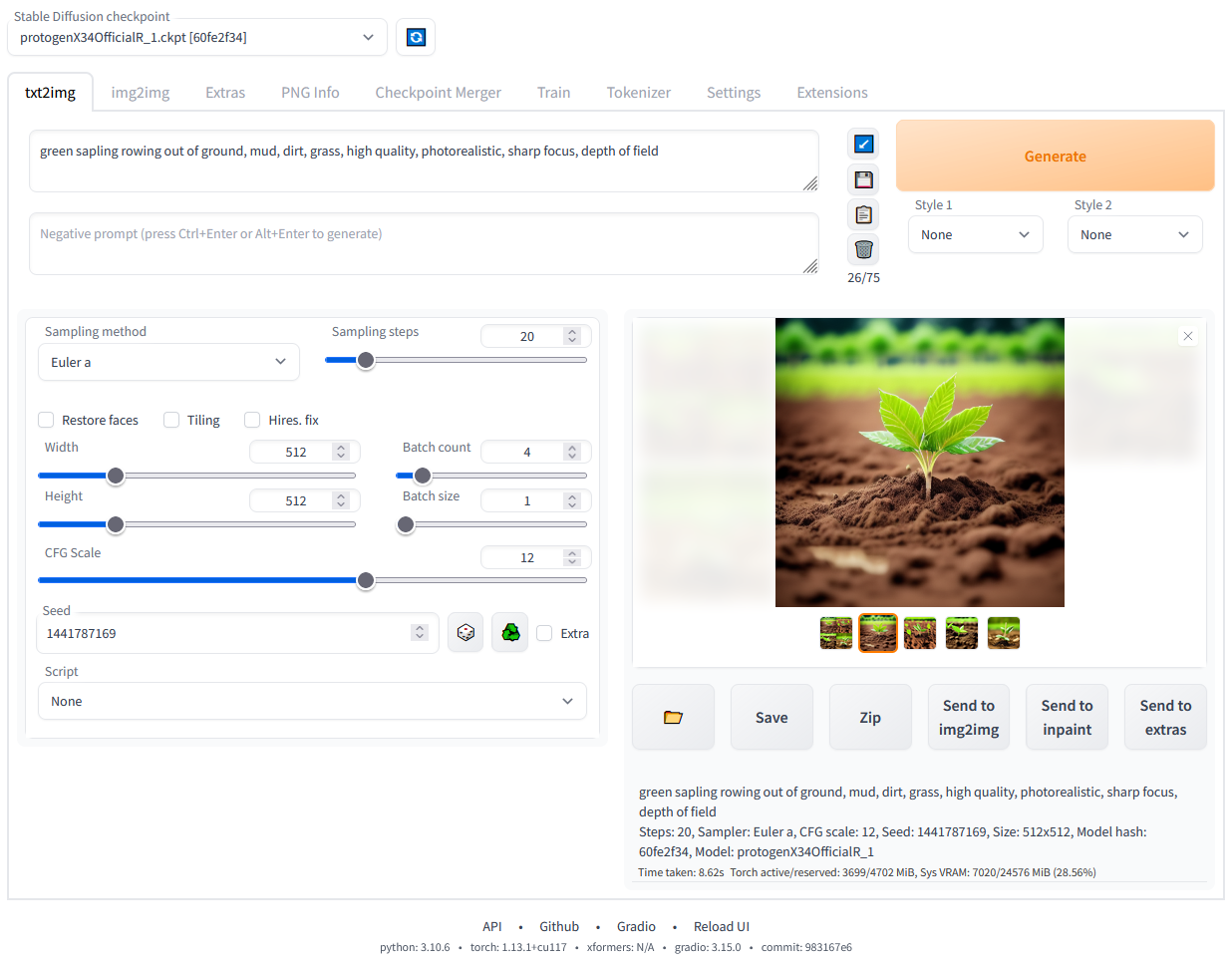

An early leader emerged last year that understood and enabled this new generation of artists using Stable Diffusion: AUTOMATIC1111. Built on Gradio, Automatic1111 is a browser interface that not only exposes the parameters of the base Stable Diffusion model, such as the number of steps, the width and height of the image or the scheduler, but also lets you experiment with ecosystem tools and techniques. Many of the custom weights for Stable Diffusion you may encounter were created using Automatic1111. Automatic1111 makes it easier to deal with the combinatorial explosion of trying out five schedulers with different sampling steps and CFG scales as you experiment and land on the ideal parameters for your custom use case. Most of the images you see today created by Stable Diffusion were likely created using Automatic1111.
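The combinatorial explosion is easy to see in a few lines of Python. This sketch simply enumerates every combination of scheduler, step count and CFG scale; the specific step counts, CFG values, seed and dictionary keys are illustrative assumptions and do not correspond to Automatic1111’s own settings or API.

```python
from itertools import product

# Scheduler names mentioned earlier in this post; the numeric values
# below are assumed examples, not recommendations.
SCHEDULERS = ["DPM++ 2M Karras", "DPM++ SDE Karras", "Euler a", "Euler", "DDIM"]
STEP_COUNTS = [20, 30, 50]
CFG_SCALES = [5.0, 7.5, 10.0]


def build_grid(schedulers, step_counts, cfg_scales, seed=42):
    """Return one settings dict per combination, sharing a fixed seed
    so that differences between images come from the parameters."""
    return [
        {"scheduler": s, "steps": n, "cfg_scale": c, "seed": seed}
        for s, n, c in product(schedulers, step_counts, cfg_scales)
    ]


if __name__ == "__main__":
    grid = build_grid(SCHEDULERS, STEP_COUNTS, CFG_SCALES)
    print(len(grid), "images to generate and compare")
    print(grid[0])
```

Even this small grid produces 45 images for a single prompt, which is why a UI that lays the results out side by side is so useful.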

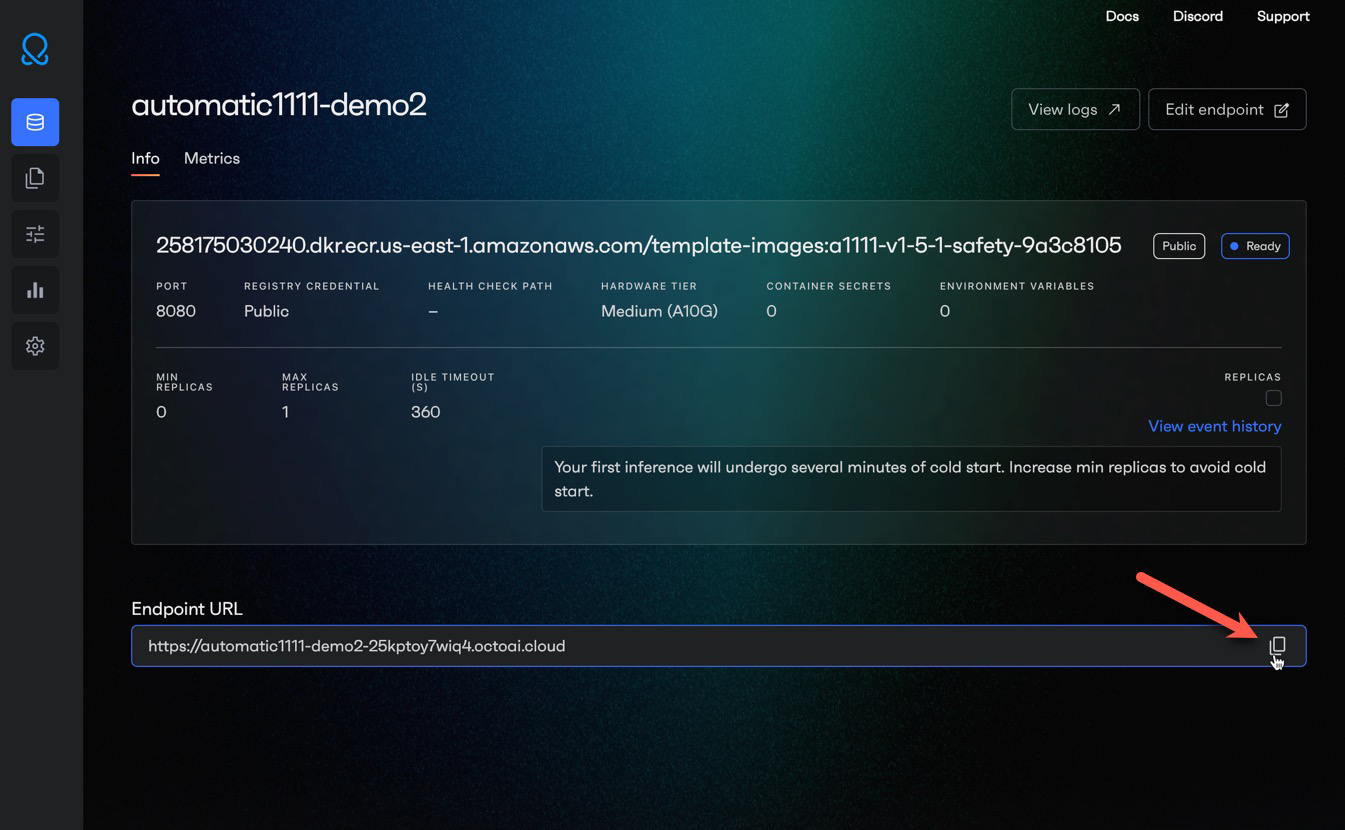

The Automatic1111 UI can connect to a local GPU to generate images. At OctoAI, we containerized Automatic1111 with Stable Diffusion to make it simple to get started with the UI, even if you don’t have an advanced GPU locally. Within seconds you can launch your custom Automatic1111 container and copy and paste the web UI URL into a browser tab. All images will be generated by default using an A10G GPU hosted by OctoAI in the cloud. Importantly, OctoAI runs an accelerated version of Stable Diffusion to generate images faster and at lower cost than unaccelerated versions.

A newer UI that has been gaining popularity this summer is ComfyUI, which brings a flowchart-based interface to Stable Diffusion. It allows you to compose different base checkpoints with custom encoders, samplers, LoRAs, hypernetworks, textual inversions and decoders, essentially giving you minute control over the entire image generation pipeline.

Beyond Image Generation

Along with new ways to generate customized images, Stable Diffusion has enabled a variety of new use cases such as outpainting to generate new parts of the image that lie outside its boundaries, inpainting to replace an object in an image, upscaling, image to image (like taking the human pose from an input image and placing it in the generated image), and even video and audio generation. Deforum and Parseq are enabling generation of video and animations, a use case that goes well beyond image generation.

Investing with the ecosystem

With the launch of OctoAI, we are investing with the image generation ecosystem - from hosting accelerated models (Stable Diffusion v1 & v2, SDXL), to tooling (Automatic1111), to multiple approaches to fine tuning. In fact, we just launched a private preview for fine tuning Stable Diffusion within OctoAI.

Thanks to all of the community that contributed to the evolution of the Stable Diffusion ecosystem in the past year!

