Fast, cost-optimized LLM endpoints

Quickly evaluate and scale the latest models through OctoAI's single API. Our deep expertise in model compilation, model curation, and ML systems means you get low-latency, affordable endpoints that can handle any production workload.

Create AI Agents with function calling

Connect LLMs to external tools

Enable effective tool usage with API calls

Create AI agents

Turn LLMs into AI agents that take actions in your app

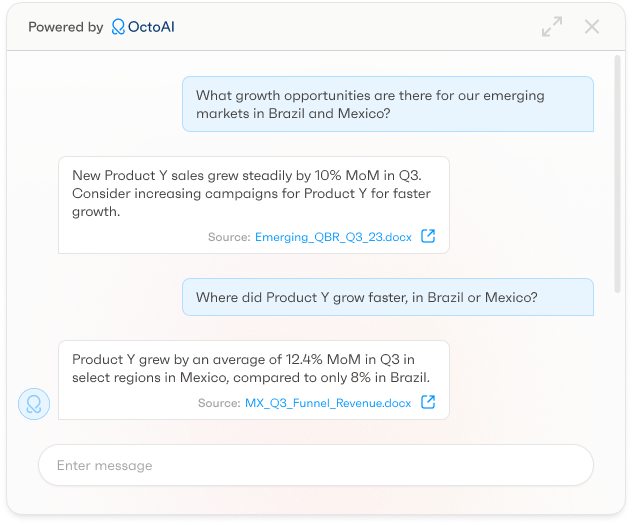

Add context for improved results

Users can interact with your app in natural language and get better outputs

Easily use real-time data

Get started with function calling on real-time data with our quick tutorial

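```python
import json

# Process any tool calls made by the model.
# Assumes `response` (the chat completion), `messages` (the conversation so far),
# and `available_functions` (a hypothetical dict mapping tool names to local
# Python functions) were defined earlier.
tool_calls = response.choices[0].message.tool_calls

if tool_calls:
    for tool_call in tool_calls:
        function_name = tool_call.function.name
        function_args = json.loads(tool_call.function.arguments)

        # Call the function to get the response. An explicit lookup table is
        # safer than locals(), which only sees names local to the current scope.
        function_response = available_functions[function_name](**function_args)

        # Add the function response to the messages block
        messages.append(
            {
                "tool_call_id": tool_call.id,
                "role": "tool",
                "name": function_name,
                "content": str(function_response),  # tool content must be a string
            }
        )
```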
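The loop above assumes the tools were declared in the request and that each tool name maps to a local function. Below is a minimal, self-contained sketch of that wiring. It assumes the OpenAI-style `tools` schema, and uses a hypothetical `get_current_weather` function plus stand-in response objects in place of a real API call so it runs offline.

```python
import json
from types import SimpleNamespace

# Hypothetical local tool: a real app would query a live weather API here.
def get_current_weather(location: str, unit: str = "celsius") -> str:
    return json.dumps({"location": location, "temperature": 22, "unit": unit})

# OpenAI-style tool declaration sent with the chat request (assumed schema).
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_current_weather",
            "description": "Get the current weather for a city",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {"type": "string"},
                    "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                },
                "required": ["location"],
            },
        },
    }
]

# Explicit name -> function map used to dispatch tool calls.
available_functions = {"get_current_weather": get_current_weather}

def run_tool_calls(message, messages):
    """Execute each tool call in `message` and append the results to `messages`."""
    for tool_call in message.tool_calls or []:
        name = tool_call.function.name
        args = json.loads(tool_call.function.arguments)
        result = available_functions[name](**args)
        messages.append(
            {"tool_call_id": tool_call.id, "role": "tool", "name": name, "content": result}
        )
    return messages

# Stand-in for response.choices[0].message so the sketch runs without a network call.
fake_message = SimpleNamespace(
    tool_calls=[
        SimpleNamespace(
            id="call_1",
            function=SimpleNamespace(
                name="get_current_weather", arguments='{"location": "Seattle"}'
            ),
        )
    ]
)

messages = run_tool_calls(fake_message, [])
print(messages[0]["content"])
```

In a real app, `messages` (now holding the tool results) would be sent back to the model so it can write its final answer.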

Trusted by GenAI Innovators

“Working with the OctoAI team, we were able to quickly evaluate the new model, validate its performance through our proof of concept phase, and move the model to production. Mixtral on OctoAI serves a majority of the inferences and end player experiences on AI Dungeon today.”

Nick Walton

CEO & Co-Founder, Latitude

“The LLM landscape is changing almost every day, and we need the flexibility to quickly select and test the latest options. OctoAI made it easy for us to evaluate a number of fine tuned model variants for our needs, identify the best one, and move it to production for our application.”

Matt Shumer

CEO & Co-Founder, Otherside AI

Run OSS and fine-tuned models

Build on your choice of OSS LLMs, or your own model, using our blazing-fast API endpoints. Scale seamlessly and reliably without sacrificing performance.

Robust reliability

Serving millions of customer inferences daily

Adaptive scalability

Growth-ready for your app

Low cost with high performance

Keeping customers and finance departments happy

Migrate with Ease

OpenAI SDK users can move to OctoAI's OpenAI-compatible API with minimal code changes
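Because the API follows the OpenAI chat completions format, migrating is mostly a matter of changing the base URL and API key. Here is a stdlib-only sketch that builds (but does not send) such a request. The base URL `https://text.octoai.run/v1`, the `OCTOAI_API_KEY` environment variable, and the model name are assumptions for illustration, so check the current documentation before relying on them.

```python
import json
import os
import urllib.request

# Assumed OpenAI-compatible base URL; only this and the key differ from OpenAI.
BASE_URL = "https://text.octoai.run/v1"

def build_chat_request(model, messages, api_key, base_url=BASE_URL, **params):
    """Build an OpenAI-style POST /chat/completions request without sending it."""
    body = {"model": model, "messages": messages, **params}
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

req = build_chat_request(
    model="meta-llama-3.1-8b-instruct",  # hypothetical model name
    messages=[{"role": "user", "content": "Hello!"}],
    api_key=os.environ.get("OCTOAI_API_KEY", "test-key"),  # hypothetical env var
    max_tokens=64,
)
# To send it: pass `req` to urllib.request.urlopen() and json.load() the response.
```

With the official OpenAI Python SDK, the equivalent change is passing `base_url` and `api_key` to the `OpenAI` client constructor; the rest of the calling code can stay the same.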

Stay up to date with new models and features

Product & Customer Updates

Introducing the Llama 3.1 Herd on OctoAI

HyperWrite: Elevating User Experience and Business Performance with OctoAI's Cutting-Edge AI Platform

Streamline Jira ticket creation with OctoAI’s structured outputs

A Framework for Selecting the Right LLM

Latest Models

Llama 3.1 Instruct

Qwen2 Instruct

Hermes 2 Pro Llama 3

Llama 3 Instruct

Demos & Webinars

Harnessing Agentic AI: Function Calling Foundations

Watch our on-demand webinar on creating AI agents with function calling for your AI apps. This technical deep dive includes a presentation, a demo, and example code to follow along with.

All about fine-tuning LLMs

Listen on demand as a panel of experts discusses the fine-tunes available, how to create your own fine-tune, alternatives to custom fine-tunes, and more.

Selecting the right GenAI model for production

Watch our on-demand webinar as our engineers review all steps of model evaluation, testing, when to use checkpoints vs LoRAs, and how to get the best results.

PDF Summarizer

Learn how to build a PDF summary app using Node.js and OctoAI’s Text Gen Solution. The app converts PDFs into summarized TXT files with an LLM.
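The demo itself uses Node.js. For consistency with the other examples here, the following is a Python sketch of one common way to summarize a long document, not necessarily the one the demo uses: split the extracted text into overlapping chunks, summarize each chunk, then summarize the combined chunk summaries. PDF text extraction is omitted, and `fake_summarize` is a hypothetical stand-in for a call to an LLM endpoint.

```python
from typing import Callable, List

def chunk_text(text: str, chunk_size: int = 2000, overlap: int = 200) -> List[str]:
    """Split text into fixed-size character chunks that overlap by `overlap` chars."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break
        start += chunk_size - overlap
    return chunks

def summarize_document(text: str, summarize: Callable[[str], str]) -> str:
    """Map: summarize each chunk. Reduce: summarize the joined chunk summaries."""
    partial = [summarize(chunk) for chunk in chunk_text(text)]
    if len(partial) == 1:
        return partial[0]
    return summarize("\n".join(partial))

# Hypothetical stand-in for an LLM call: truncates its input to 40 characters.
def fake_summarize(text: str) -> str:
    return text[:40]

doc = "OctoAI serves LLMs. " * 300  # 6,000 characters of placeholder text
summary = summarize_document(doc, fake_summarize)
```

The overlap keeps sentences that straddle a chunk boundary visible to both neighboring summaries; chunk size would in practice be chosen to fit the model's context window.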

JSON mode for reliable structured output

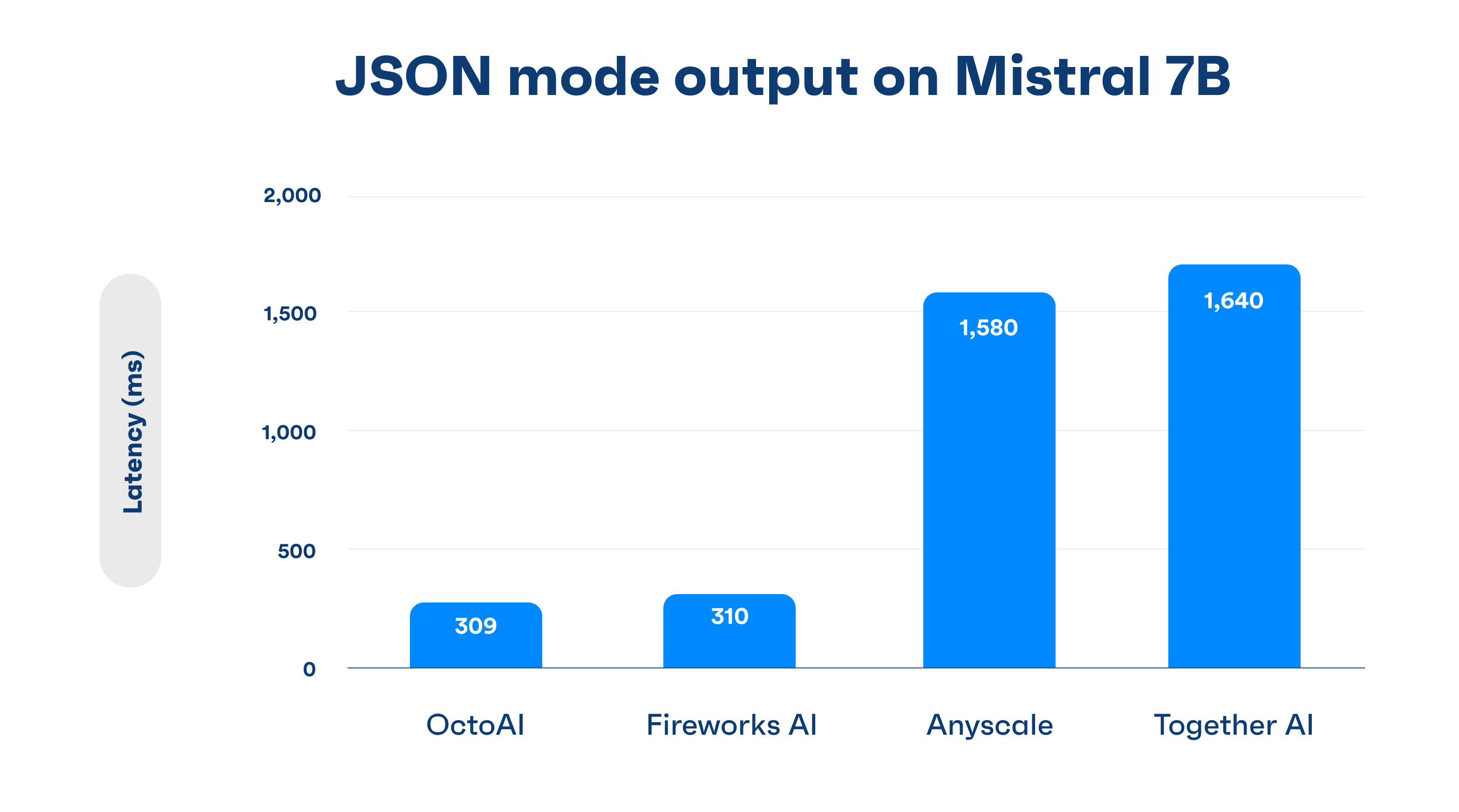

JSON mode is built into leading models on the OctoAI Systems Stack, so they return structured JSON without disrupting generation or degrading output quality. OctoAI has gone further and optimized JSON mode for industry-leading latency.
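A sketch of a JSON-mode request body and a defensive parse of the reply. It assumes the OpenAI-style `response_format={"type": "json_object"}` parameter; the model name and the ticket fields are hypothetical, and the model reply is a hard-coded string so the example runs offline.

```python
import json

# OpenAI-style chat request body with JSON mode enabled (assumed parameter name).
request_body = {
    "model": "mixtral-8x7b-instruct",  # hypothetical model name
    "messages": [
        {
            "role": "system",
            "content": "Reply only with a JSON object with keys 'summary' and 'priority'.",
        },
        {"role": "user", "content": "The login page times out for all users."},
    ],
    "response_format": {"type": "json_object"},
}

REQUIRED_KEYS = {"summary", "priority"}  # hypothetical schema for a ticket

def parse_json_reply(content: str) -> dict:
    """Parse the model's reply and check that the expected keys are present."""
    data = json.loads(content)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS.difference(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data

# Hard-coded stand-in for response.choices[0].message.content.
reply = '{"summary": "Login page times out", "priority": "high"}'
ticket = parse_json_reply(reply)
```

JSON mode guarantees syntactically valid JSON, not a particular set of keys, so checking the fields your app depends on is still worthwhile.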

Build using our high-quality, cost-effective Mixtral 8x7B & 8x22B models

Our accelerated Mixtral delivers quality competitive with GPT-3.5, with the flexibility of open source, at a price per token 4x lower than GPT-3.5. Migrating is easy with one unified, OpenAI-compatible API. We also support fine-tunes from the community, including the latest from Nous Research.
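Because cost scales linearly with token volume, a 4x lower per-token price means the same traffic costs one quarter as much, a 75% saving. A small worked sketch; the daily token volume and the baseline price below are hypothetical placeholders, not published rates.

```python
def monthly_cost(tokens_per_day: int, price_per_million: float, days: int = 30) -> float:
    """Cost of a workload in dollars, given a price per 1M tokens."""
    return tokens_per_day * days * price_per_million / 1_000_000

baseline_price = 2.00        # hypothetical $ per 1M tokens for the baseline model
ratio = 4                    # "4x lower price per token", from the text above
tokens_per_day = 50_000_000  # hypothetical daily token volume

baseline = monthly_cost(tokens_per_day, baseline_price)
cheaper = monthly_cost(tokens_per_day, baseline_price / ratio)
savings = 1 - cheaper / baseline
# baseline == 3000.0, cheaper == 750.0, savings == 0.75
```

The savings fraction is 1 - 1/ratio regardless of the volume or baseline price chosen, so only the ratio matters for the percentage.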

Text embedding for RAG

Use the GTE Large embedding endpoint to power retrieval augmented generation (RAG) or semantic search in your apps. It scores 63.13% on the MTEB leaderboard, and because the API is OpenAI-compatible, migrating from OpenAI requires minimal code updates.
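Semantic search over embeddings usually ranks documents by cosine similarity to the query embedding. Here is a stdlib-only sketch of that ranking step. The 3-dimensional vectors are toy placeholders (real GTE Large embeddings are much longer and would come from the embedding endpoint), and the document names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(
    query: Sequence[float], docs: Dict[str, Sequence[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Return the k document ids most similar to the query, best first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in docs.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]

# Toy embeddings standing in for vectors returned by an embedding endpoint.
docs = {
    "pricing.md": [0.9, 0.1, 0.0],
    "function-calling.md": [0.1, 0.9, 0.2],
    "json-mode.md": [0.0, 0.3, 0.9],
}
query = [0.2, 0.8, 0.1]  # e.g. the embedding of a question about tool use
results = top_k(query, docs, k=2)
```

In a RAG setup, the text of the top-ranked documents is then added to the prompt sent to the LLM. At scale, a vector database or approximate nearest-neighbor index would replace this linear scan.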

Build using multiple models for your use case

With OctoAI, you can chain several generative models into a high-performance pipeline. You can build new experiences for your industry's needs using language, image, audio, or your own custom models. Learn how our customer, Capitol AI, worked with us to cut costs on the multiple models it runs in production.
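Chaining models amounts to feeding one model's output into the next model's input. A minimal sketch with hypothetical stage functions standing in for real endpoint calls (for example, a language model that drafts an outline and writes an image prompt, followed by an image model); none of these names are OctoAI APIs.

```python
from functools import reduce
from typing import Callable, List

Stage = Callable[[dict], dict]

def run_pipeline(stages: List[Stage], payload: dict) -> dict:
    """Pass the payload through each stage in order; each returns an updated dict."""
    return reduce(lambda data, stage: stage(data), stages, payload)

# Hypothetical stages; a real app would call model endpoints here.
def draft_outline(data: dict) -> dict:
    return {**data, "outline": f"Outline for: {data['topic']}"}

def write_image_prompt(data: dict) -> dict:
    return {**data, "image_prompt": f"Illustration of {data['topic']}"}

def generate_image(data: dict) -> dict:
    # Placeholder for an image-generation call; just records the prompt used.
    return {**data, "image": f"<image for '{data['image_prompt']}'>"}

result = run_pipeline(
    [draft_outline, write_image_prompt, generate_image],
    {"topic": "solar energy"},
)
```

Keeping each stage a plain dict-in, dict-out function makes it easy to swap one model for another or to insert a custom model between stages.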