Today, we’re excited to announce the private preview of “bring your own” fine-tuned LLMs on the OctoAI Text Gen Solution. Early access customers can:

- host any fine-tuned Llama 2, Code Llama, Mistral, or Mixtral model on OctoAI, and
- run it at the same low per-token pricing and latency as the default Chat and Instruct models available in OctoAI.

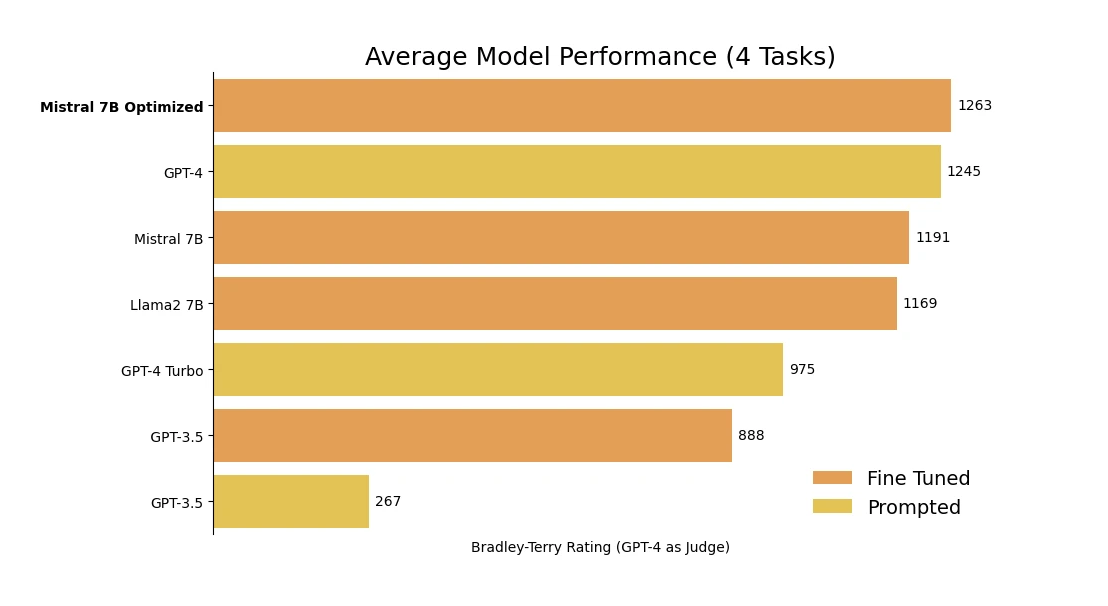

We’ve heard from customers that fine-tuned LLMs are the best way to create customized experiences in their applications, and that the right fine-tuned LLM for a use case can outperform larger alternatives in both quality and cost, as the OpenPipe chart later in this post shows. Many are also using fine-tuned versions of smaller LLMs to reduce their spend and their dependence on OpenAI. Fine-tuned models like the 13B-parameter Nous Hermes from NousResearch are punching well above their weight for their size and cost. But popular LLM serving platforms charge a “fine-tuning tax”: a premium of 2x or more for inference against fine-tuned models.

OctoAI delivers the best unit economics for generative AI models, and these efficiencies extend to fine-tuned LLMs on OctoAI. Building on them, OctoAI offers one simple per-token price for inference against a model, whether it’s one of the default models on OctoAI or your choice of fine-tuned LLM. Choose a model based on your application’s needs; don’t let the “fine-tuning tax” skew the decision.

“Bring your own” fine-tuned LLMs on the OctoAI Text Gen Solution are available in private preview today. Please reach out if you are interested.

Fine-tuned models are the key to customized experiences and expanded applications

Alongside the adoption of open models and model cocktails, there is a growing recognition that customizing LLM-generated outputs is necessary to provide differentiated experiences and engaging interactions. Prompt engineering and retrieval-augmented generation (RAG) are useful for predictable outcomes and data augmentation, but they are no longer sufficient as LLMs are applied to a wider range of tasks and AI-powered applications attempt to deliver ever more realistic, human-like interactions. One-shot and few-shot prompting with in-context learning (ICL) offer alternatives, but studies have shown the limits of ICL as the required context, cost constraints, and query volume increase. All of these conditions hold for realistic experiences delivered to end users in real-world applications.

There are many ways to start evaluating fine-tuned LLMs for your use case. The most common starting point is an existing fine-tuned model that is closely related to your desired use case, found in popular model repositories like Hugging Face or through communities like r/LocalLLaMA on Reddit. You can also start with a base model and create your own fine-tuned model, using datasets from repositories like Kaggle to get started. Over time, you can use those datasets as templates and update them with data specific to your own application or use case to further fine-tune your model.

AI innovators are already delivering differentiated experiences with fine-tuned LLMs

With fine-tuned models, AI-powered applications can deliver truly differentiated experiences and build a lasting advantage in their respective domains. Innovators at the forefront of this space are already building and using fine-tuned models to power some of the most useful and fastest-growing AI apps on the market.

The LLM landscape is changing almost every day, and we need the flexibility to quickly select and test the latest options. OctoAI made it easy for us to evaluate a number of fine-tuned Llama 2 variants for our needs, identify the best one, and move it to production for our application.

Matt Shumer, CEO & Co-Founder @ Otherside AI

The improvement in end-user experience is noticeable, and it is now being validated by a number of quantitative tests and empirical evaluations. The chart below from OpenPipe shows a fine-tuned Mistral 7B model outperforming much larger models (including GPT-4) on specific tasks.

Source: https://openpipe.ai/blog/mistral-7b-fine-tune-optimized

Fine-tuned models enable unique generative AI powered experiences in applications. We believe fine-tuned models are key to the next phase of generative AI expansion, and the team has been working to enable developers to cost-effectively adopt fine-tuned models in their applications. The technology that underpins the OctoAI asset orchestrator is key to this, and we’re expanding these capabilities to bring similar efficiencies and flexibility to text generation. More on this in the near future!

Reach out to run your custom fine-tuned LLM checkpoint on OctoAI

Support for custom checkpoints on the OctoAI Text Gen Solution is in private preview today. Please contact us if you are interested in access. You can also get started at no cost with the built-in LLMs available on the OctoAI Text Gen Solution.

In addition, we have a promotional program to accelerate the adoption of open-source LLMs alongside your existing OpenAI-powered applications. Read more about it in the OctoAI Model Remix Program introduction.