In this article

Retrieval Augmented Generation (RAG) and LLM Context Windows

RAG cannot work for image generation — larger data sizes and the need for form

Asset Orchestration brings the ease of the RAG experience to Image Generation

Use Asset Orchestration today, reach out if you would like to be a design partner in its evolution

Foundation models are powerful. We’ve seen from early adopters that truly unlocking value from these models requires customizing their outputs, such as including product-specific information in a support chatbot or location-specific details in a travel app. For text generation applications, the Retrieval Augmented Generation (RAG) pattern and the frameworks built around it simplify customization by providing application-specific context to models, and RAG is quickly becoming the standard way to adopt Large Language Models (LLMs). But there is no RAG pattern yet for typical media generation, which has limited how far developers can customize AI for media generation.

Given the excitement from customers around Asset Orchestration, we thought we would share a brief overview of why we designed it: how RAG works, why it does not apply to image generation, and how Asset Orchestration enables use-case-aware and context-aware image generation.

Retrieval Augmented Generation (RAG) and LLM Context Windows

RAG is the norm for how builders and businesses integrate LLMs into applications. In this approach, application- and business-specific context is provided to the model at inference time, so the generated output can incorporate these additional facts as needed. This information could be datasheets and guides for specific products, as in the customer service chatbot we discussed, or detailed and current information about events, locations and prices, as in a travel application.

The key to this approach is the ability of popular LLMs to perform “in-context learning”: using information in their input context during generation. The context window holds this additional information, which is provided to the LLM at inference time as part of the input tokens. The size of the context window, meaning the total number of tokens that can be passed to the LLM as input, determines how much information can be included. In the RAG architecture, developers use a predefined set of data, often pre-processed and curated in a data store for optimal retrieval based on the input prompt, from which additional information is retrieved.
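Because the context window is a fixed token budget, a RAG system has to decide which retrieved passages fit. The sketch below is a minimal, hypothetical illustration rather than any framework's method. It approximates tokens by whitespace-separated words, where a real system would use the model's own tokenizer, and it greedily keeps the highest-ranked passages that still fit.

```python
from typing import List


def count_tokens(text: str) -> int:
    # Rough stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def pack_context(passages: List[str], budget: int) -> List[str]:
    """Keep passages (assumed sorted by relevance) while they fit in the token budget."""
    packed: List[str] = []
    used = 0
    for passage in passages:
        cost = count_tokens(passage)
        if used + cost > budget:
            continue  # skip a passage that would overflow the context window
        packed.append(passage)
        used += cost
    return packed


if __name__ == "__main__":
    ranked = [
        "Model X200 supports 4K output at 60 Hz.",
        "The X200 warranty covers parts and labour for two years.",
        "Firmware updates are released quarterly.",
    ]
    print(pack_context(ranked, budget=20))
```

Skipping an oversized passage, instead of stopping at it, lets a shorter but lower-ranked passage still use the remaining budget.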

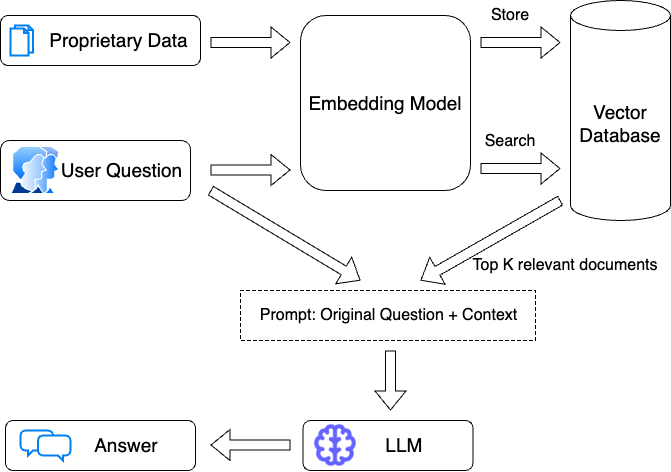

The reference data sources could be as simple as additional text that captures context information, or more elaborate mechanisms such as a pre-optimized search corpus (using TF-IDF, BM25, etc.) or a vector database holding vector embeddings of the context information (using Instructor, BERT, etc.). Text-to-text generation is then broken into a multi-step process. The first step queries the data sources and retrieves the information relevant to the input text (Retrieval). The second step inserts the retrieved information into the input prompt (Augmented). Finally, the LLM uses the information in the prompt to generate a result (Generation). This process helps ensure that LLM outputs fit the specific application and use case, and it gives domain- and user-specific control over the model without costly retraining.
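To make the Retrieval and Augmented steps concrete, here is a minimal sketch using BM25, one of the scoring functions mentioned above, written from scratch in plain Python. The parameter defaults (`k1=1.5`, `b=0.75`), the prompt template, and the sample documents are illustrative assumptions. The final Generation step, which sends the prompt to an LLM, is left out.

```python
import math
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    # Lowercase and strip basic punctuation; real search engines use richer analyzers.
    return [t.strip(".,?!:;").lower() for t in text.split()]


class BM25:
    """Minimal Okapi BM25 ranking over an in-memory corpus."""

    def __init__(self, docs: List[str], k1: float = 1.5, b: float = 0.75) -> None:
        self.raw = list(docs)
        self.docs = [tokenize(d) for d in docs]
        self.k1, self.b = k1, b
        self.n = len(self.docs)
        self.avgdl = sum(len(d) for d in self.docs) / self.n
        # Document frequency: how many documents contain each term.
        self.df = Counter(term for d in self.docs for term in set(d))

    def idf(self, term: str) -> float:
        n_q = self.df.get(term, 0)
        # The "+ 1" inside the log keeps IDF non-negative for very common terms.
        return math.log((self.n - n_q + 0.5) / (n_q + 0.5) + 1)

    def score(self, query: str, i: int) -> float:
        tf = Counter(self.docs[i])
        length_norm = 1 - self.b + self.b * len(self.docs[i]) / self.avgdl
        total = 0.0
        for term in tokenize(query):
            f = tf[term]
            if f:
                total += self.idf(term) * f * (self.k1 + 1) / (f + self.k1 * length_norm)
        return total

    def top_k(self, query: str, k: int = 2) -> List[str]:
        # Retrieval: rank every document by BM25 score and keep the best k.
        ranked = sorted(range(self.n), key=lambda i: self.score(query, i), reverse=True)
        return [self.raw[i] for i in ranked[:k]]


def augment(query: str, passages: List[str]) -> str:
    # Augmentation: insert the retrieved passages into the prompt.
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "The X200 camera records 4K video.",
        "Our return policy lasts 30 days.",
        "The X200 battery lasts 5 hours.",
    ]
    question = "How long does the X200 battery last?"
    print(augment(question, BM25(docs).top_k(question, k=1)))
```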

Retrieval Augmented Generation (RAG) architecture, Source: LangChain Blog

Frameworks like LangChain and LlamaIndex simplify this further by abstracting model-specific operations, retrieval and prompt inclusion, and application construction. They support simple operations, like adding documents to the prompt with LangChain’s stuff documents chain, as well as more complex retrieval through pre-built integrations with vector databases like Pinecone or Elastic.

RAG frameworks provide a templated path, or pattern, for creating context-aware LLM outputs:

prepare data sources that represent the context information needed for the application,

retrieve the appropriate references at generation time,

incorporate information from the retrieved reference sources for generation.
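The three steps map naturally onto code. The following sketch is a toy. It assumes an in-memory store in which normalized bag-of-words count vectors stand in for learned embeddings such as Instructor or BERT. The class and function names are hypothetical and are not taken from LangChain or LlamaIndex.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def words(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class TinyVectorStore:
    """Toy in-memory vector store; bag-of-words vectors stand in for real embeddings."""

    def __init__(self) -> None:
        self.vocab: Dict[str, int] = {}
        self.docs: List[str] = []
        self.vectors: List[List[float]] = []

    def _embed(self, text: str) -> List[float]:
        # Count known words, then L2-normalize so a dot product equals cosine similarity.
        vec = [0.0] * len(self.vocab)
        for word, count in Counter(words(text)).items():
            if word in self.vocab:
                vec[self.vocab[word]] = float(count)
        norm = math.sqrt(sum(x * x for x in vec)) or 1.0
        return [x / norm for x in vec]

    def prepare(self, docs: List[str]) -> None:
        # Step 1: build the vocabulary and index every document.
        for doc in docs:
            for word in words(doc):
                self.vocab.setdefault(word, len(self.vocab))
        self.docs = list(docs)
        self.vectors = [self._embed(doc) for doc in self.docs]

    def retrieve(self, query: str, k: int = 1) -> List[str]:
        # Step 2: rank documents by cosine similarity to the query.
        q = self._embed(query)
        sims = [sum(a * b for a, b in zip(q, v)) for v in self.vectors]
        order = sorted(range(len(self.docs)), key=lambda i: sims[i], reverse=True)
        return [self.docs[i] for i in order[:k]]


def incorporate(query: str, references: List[str]) -> str:
    # Step 3: place the retrieved references into the prompt sent to the LLM.
    context = "\n".join(references)
    return f"Context:\n{context}\n\nUser question: {query}"
```

A production setup would replace the toy vectors with a real embedding model and the list scan with a vector database. The prepare, retrieve, incorporate shape stays the same.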

While RAG is not sufficient for every type of customization (for example, generating text in a specific tone or writing in a new style cannot be accomplished with RAG), it does address the most frequent customization need in text generation: incorporating existing, specific information into the outputs.

RAG cannot work for image generation — larger data sizes and the need for form

At this point, one thing becomes clear. Everything in RAG, be it corpus retrieval, vector stores, or the prompt inclusion approach to providing context to the model, is designed for text generation. Two fundamental aspects make RAG a valuable architecture for text generation. First, LLM context windows provide an effective way to give the model additional data to use during generation. Second, many of the customization needs commonly seen in text generation involve incorporating use-case-specific data. Neither applies directly to image generation.

Let’s start by briefly looking at GenAI image generation. At its core are diffusion models (specifically, latent diffusion models). Diffusion models are trained by adding noise to images and learning to reverse that process. At generation time, they start from random noise and iteratively denoise it into the final output. The text prompt guides the denoising process through text conditioning, so the output moves closer to the desired image at each step, based on what the model learned from the images in its training data.
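For reference, the standard denoising diffusion formulation can be written as follows. The notation is an assumption and does not come from this article: $z_0$ is the clean latent, $\epsilon$ is Gaussian noise, $\beta_s$ is the noise schedule, $c$ is the text conditioning, and $\epsilon_\theta$ is the network that predicts noise. Stable Diffusion steers denoising with classifier-free guidance, which blends conditional and unconditional noise predictions using a guidance scale $w$.

```latex
\bar{\alpha}_t = \prod_{s=1}^{t} (1 - \beta_s)

q(z_t \mid z_0) = \mathcal{N}\!\left(z_t;\ \sqrt{\bar{\alpha}_t}\, z_0,\ (1 - \bar{\alpha}_t)\, I\right)
\quad\Longleftrightarrow\quad
z_t = \sqrt{\bar{\alpha}_t}\, z_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon,
\qquad \epsilon \sim \mathcal{N}(0, I)

\hat{\epsilon}_\theta(z_t, c) = \epsilon_\theta(z_t, \varnothing)
  + w \left( \epsilon_\theta(z_t, c) - \epsilon_\theta(z_t, \varnothing) \right)
```

At each sampling step, the sampler uses $\hat{\epsilon}_\theta$ to move $z_t$ toward a cleaner latent. The only per-request inputs are the conditioning $c$ (and optionally an initial latent or mask). No slot exists for a corpus of reference context.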

The inputs to this process are the text prompt and sometimes (as in SDXL 1.0) additional images, such as a starting image and a mask for use cases like inpainting. Beyond these inputs, image models lack the in-context learning capabilities of language models, so there is no comparable way to supply context that controls the generation. Even if there were, the size of images would make it challenging. The default 1024x1024 pixel image from Stable Diffusion XL is approximately 1MB in size, so a set of reference images would add several megabytes of data to each call. Contrast this with text context: the roughly 4,000-token context window of Llama 2 holds only about 16 KB of text (at around four characters per token), which keeps the process efficient and scalable.
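The size gap can be checked with quick arithmetic. This sketch assumes uncompressed 8-bit RGB pixels and roughly four characters (bytes) of English text per token. That ratio is a common rule of thumb, not a property of any specific tokenizer, so the exact text figure varies. Compressed image files such as PNG are smaller than the raw figure but remain far larger than the text.

```python
BYTES_PER_MB = 1_000_000


def raw_image_bytes(width: int, height: int, channels: int = 3, bytes_per_channel: int = 1) -> int:
    # Uncompressed size of an image buffer (assumption: 8-bit RGB by default).
    return width * height * channels * bytes_per_channel


def text_context_bytes(tokens: int, chars_per_token: int = 4) -> int:
    # Approximate size of a text context (assumption: ~4 ASCII characters per token).
    return tokens * chars_per_token


image = raw_image_bytes(1024, 1024)
text = text_context_bytes(4000)
print(f"one raw 1024x1024 image: {image / BYTES_PER_MB:.2f} MB")
print(f"4,000-token text context: {text / BYTES_PER_MB:.3f} MB")
print(f"ratio: ~{image / text:.0f}x")
```

Even before counting several reference images per request, a single uncompressed image is about two orders of magnitude larger than a full text context.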

This is where the second challenge emerges: image generation customization is often about style. The most common customer need is to apply a specific set of styles to generated images predictably and consistently. This could mean applying a company’s thematic visuals, creating images set in a specific game universe, or keeping images within a specific style for consistency. As discussed earlier, a model’s capacity to create images is determined by the images used during training. Providing an image as input can serve specific inpainting or cropping use cases, but it cannot produce new imagery in that image’s style, like placing your custom character in different settings and actions, or giving images a unique color scheme. Creating new visuals in a specific style requires retraining the model. It is not enough to add images (facts); the model must be taught how to create new types of images (form).

Today, there are thousands of pre-created fine-tuning LoRAs and checkpoints that developers can use to customize their models. This was enabled by innovations like the application of LoRA to text-to-image models, and by easily accessible communities and repositories for sharing these creations.
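LoRA keeps these assets small. In the standard formulation, which is stated here as background because the article does not spell it out, a frozen weight matrix $W$ of shape $d_{out} \times d_{in}$ is adapted as $W + (\alpha / r)\,BA$. Here, $B$ is $d_{out} \times r$ and $A$ is $r \times d_{in}$, with a small rank $r$. The plain-Python sketch below applies that update to a tiny matrix and compares trainable parameter counts for a hypothetical 1024 × 1024 layer.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    # (m x n) @ (n x p) -> (m x p)
    return [
        [sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]


def apply_lora(w: Matrix, b: Matrix, a: Matrix, alpha: float, rank: int) -> Matrix:
    """Return W + (alpha / rank) * B @ A without modifying the frozen W."""
    delta = matmul(b, a)
    scale = alpha / rank
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]


def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    # B has d_out * rank entries and A has rank * d_in entries.
    return rank * (d_out + d_in)


if __name__ == "__main__":
    w = [[1.0, 0.0], [0.0, 1.0]]
    b = [[1.0], [0.0]]  # 2 x 1
    a = [[0.0, 2.0]]    # 1 x 2
    print(apply_lora(w, b, a, alpha=1.0, rank=1))  # [[1.0, 2.0], [0.0, 1.0]]
    full = 1024 * 1024
    low_rank = lora_param_count(1024, 1024, rank=8)
    print(full, low_rank, full // low_rank)  # 1048576 16384 64
```

Because only $B$ and $A$ are stored, a LoRA file is a small fraction of a full checkpoint. That is what makes swapping styles per request plausible.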

Asset Orchestration brings the ease of the RAG experience to Image Generation

The image generation customization we discussed requires the model to learn to create new imagery. Common approaches to fine-tuning Stable Diffusion, like LoRAs, checkpoints, and textual inversions, are popular and widely available in the community, but application developers had no way to use them at scale. There is an emerging need to apply these customizations to image generation models in a scalable and efficient manner, just as RAG does for LLMs.

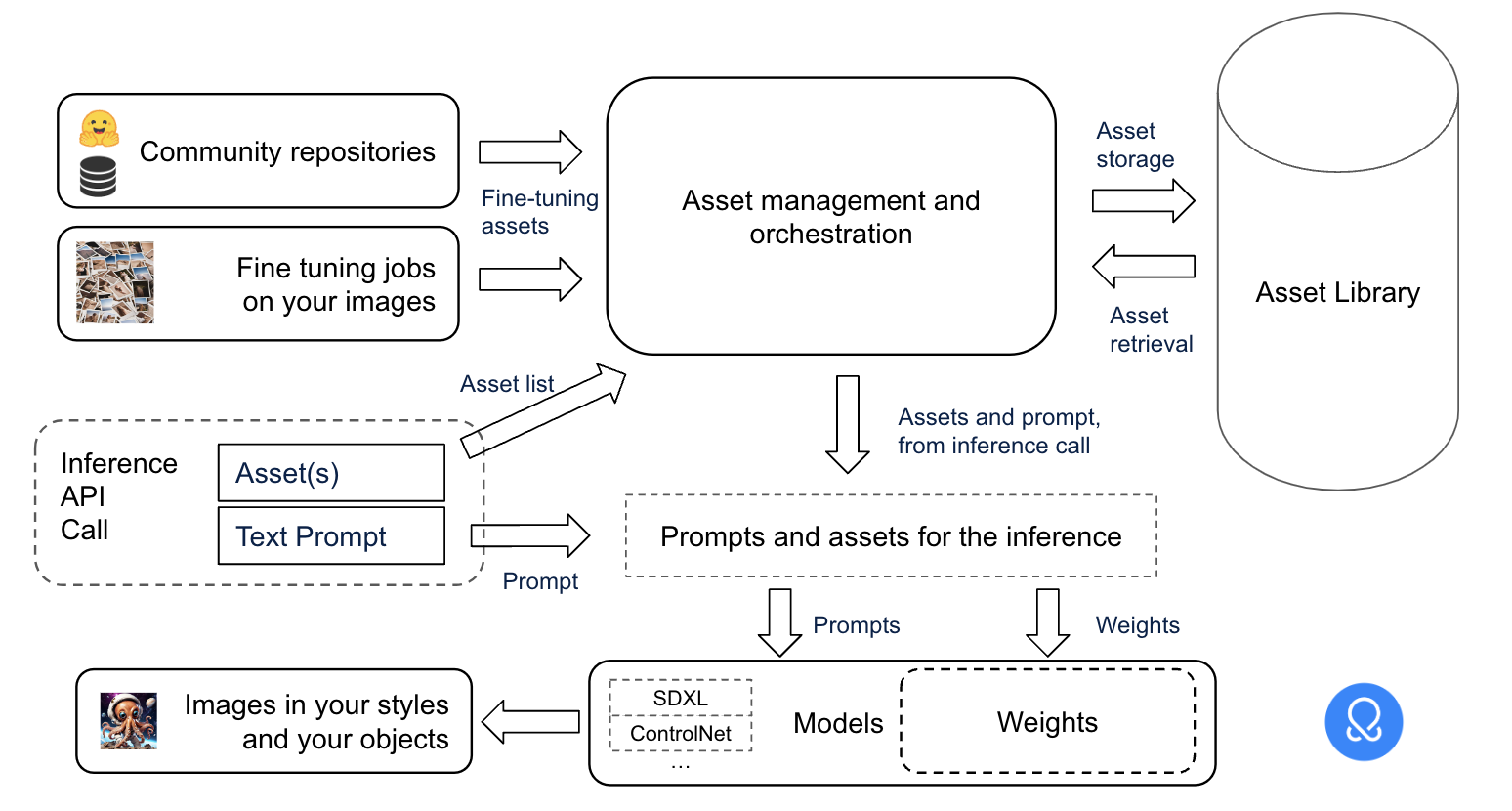

Asset Orchestration addresses this need by dynamically combining assets at inference time, enabling on-the-fly customization of your image models. Assets are fine-tuning artifacts, such as LoRAs and checkpoints, hosted in a customer’s Asset Library. Each inference API call specifies one or more assets to apply for that generation. Based on the selected assets and their ratios, the corresponding fine-tuned or retrained model weights are dynamically loaded at inference time. This relies on specific capabilities built into OctoAI, including the accelerated base model, intelligent caching, and separation of weights from the model. Together, these allow fine-tuning assets ranging from LoRAs of tens of MB to GB-sized checkpoints to be loaded efficiently and quickly.
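One common way to combine several LoRA-style assets is to add each asset's weight delta, scaled by its ratio, to the base weights. This is shown here as an assumption for illustration and is not OctoAI's documented implementation. The asset names and the tiny 2 × 2 matrices are hypothetical.

```python
from typing import Dict, List

Matrix = List[List[float]]


def merge_assets(base: Matrix, deltas: Dict[str, Matrix], ratios: Dict[str, float]) -> Matrix:
    """Return base + sum(ratio * delta) over the requested assets (hypothetical sketch)."""
    merged = [row[:] for row in base]  # copy so the shared base weights stay untouched
    for name, ratio in ratios.items():
        delta = deltas[name]
        for i, row in enumerate(delta):
            for j, value in enumerate(row):
                merged[i][j] += ratio * value
    return merged


if __name__ == "__main__":
    base = [[1.0, 0.0], [0.0, 1.0]]
    deltas = {
        "brand_style": [[0.5, 0.0], [0.0, 0.5]],     # hypothetical style asset
        "game_character": [[0.0, 1.0], [1.0, 0.0]],  # hypothetical character asset
    }
    print(merge_assets(base, deltas, {"brand_style": 0.8, "game_character": 0.5}))
```

Keeping the base weights separate and copying before merging means one loaded base model can serve requests that ask for different asset mixes.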

Asset Orchestration architecture in action, Source: OctoAI

Asset Orchestration is an architecture built from the ground up to enable customization of media generation models. It lets a live endpoint and its running model adopt new forms and fine-tuned styles at runtime, and it has been proven to work at scale in the OctoAI Image Gen Solution. Asset Orchestration thus offers the same simple path to customizing image generation that RAG offers for LLMs:

prepare the Asset Library with your set of imported or created fine-tuning assets,

retrieve the desired fine-tuning assets at inference time, and

incorporate the fine-tuning assets for the image generation.
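The prepare, retrieve, incorporate loop can be sketched end to end. Everything here is hypothetical and is not OctoAI's API or implementation, including the asset names, the `load_from_storage` stand-in, and the small least-recently-used cache. The cache illustrates one way to keep repeatedly requested assets from being reloaded on every call.

```python
from collections import OrderedDict
from typing import Callable, Dict, List


class AssetCache:
    """Hypothetical LRU cache that keeps recently used assets in memory."""

    def __init__(self, loader: Callable[[str], str], capacity: int = 2) -> None:
        self.loader = loader
        self.capacity = capacity
        self.loads = 0  # how many times the slow loader actually ran
        self._items: "OrderedDict[str, str]" = OrderedDict()

    def get(self, name: str) -> str:
        if name in self._items:
            self._items.move_to_end(name)  # mark as most recently used
            return self._items[name]
        self.loads += 1
        asset = self.loader(name)
        self._items[name] = asset
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used asset
        return asset


# 1. Prepare: a hypothetical Asset Library mapping asset names to stored weights.
ASSET_LIBRARY: Dict[str, str] = {
    "brand_style": "<LoRA weights for brand style>",
    "game_character": "<LoRA weights for game character>",
}


def load_from_storage(name: str) -> str:
    # Stand-in for fetching weights from disk or object storage (the slow part).
    return ASSET_LIBRARY[name]


cache = AssetCache(load_from_storage, capacity=2)


def generate(prompt: str, asset_names: List[str]) -> dict:
    # 2. Retrieve the requested assets, reusing cached copies when possible.
    assets = [cache.get(name) for name in asset_names]
    # 3. Incorporate: a real system would merge these weights into the model
    #    and run the diffusion pipeline; here we only return what would be used.
    return {"prompt": prompt, "assets": assets}
```

Calling `generate` twice with `["brand_style"]` loads the asset once and serves the second request from the cache.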

Use Asset Orchestration today, reach out if you would like to be a design partner in its evolution

Asset Orchestration is available today in the OctoAI Image Gen Solution. Get started by creating your own fine-tuning assets, importing your choice of LoRAs, checkpoints, or textual inversions from the thousands available in popular repos, and mixing and matching them to create innovative new styles.

We’re working on expanding the application of Asset Orchestration to other domains, and are looking for design partners to help us shape this capability and roadmap. If you have an interesting model customization use case and would like to work with us, reach out!