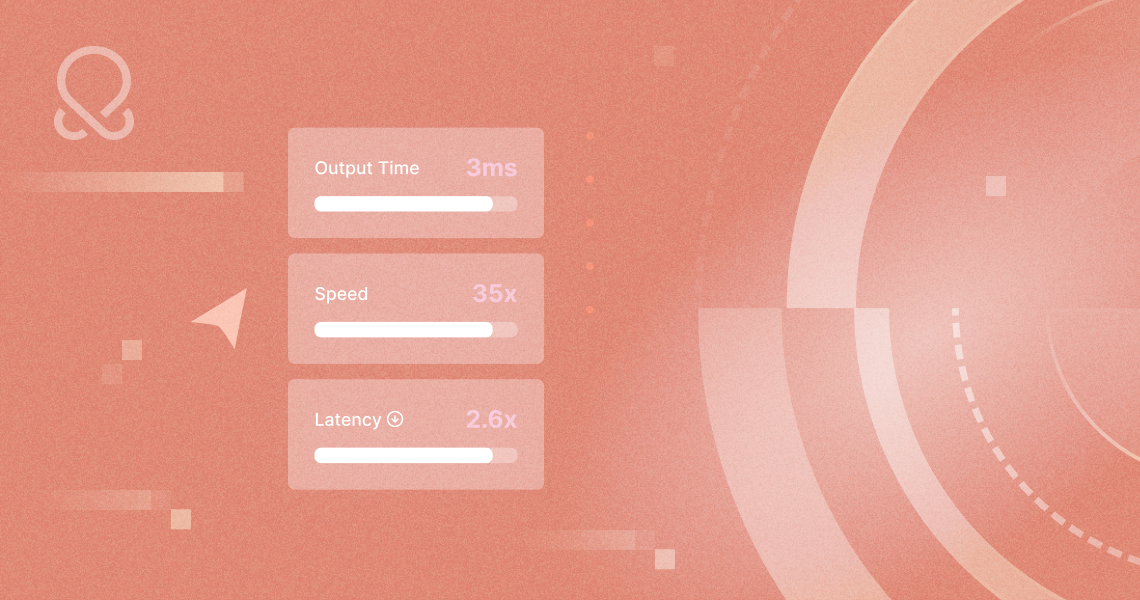

Capitol AI increases speeds by 4x and reduces costs by 75% on OctoAI

“Capitol AI is a cutting-edge platform that has recently made a significant splash in the world of artificial intelligence (AI) tools. It stands out for its comprehensive suite of features designed to assist creators, builders, entrepreneurs, and marketers in harnessing the power of AI.”

Written by Capitol AI's article mode.

Read on to learn more. You can sign up and get started with the OctoAI Text Gen Solution today.

Capitol AI brings GenAI to content creation tasks

Capitol AI builds on multiple generative AI models across the language and visual domains, giving users an intuitive interface for creating high-quality content in collaboration with AI. The platform offers multiple “modes” so that users can create output that matches their needs. These include modes for common assets such as slideshows, articles, and academic content, as well as user-created modes for specific custom output types.

Like many businesses that need to validate a GenAI-based business idea quickly, Capitol AI launched its AI creativity platform on readily available black-box API tools, prioritizing speed and time to market. The platform saw rapid adoption: daily usage grew from tens of users on the prototype to thousands in the days after its public launch on Product Hunt, and today the platform serves tens of thousands of users in their creative process every day.

Building on a mix of small models for performance, cost and flexibility

Right from the start, the Capitol AI team saw open models as an important part of the platform's longer-term roadmap. Building on a mix of models would let the platform serve each user experience with the best-suited model, delivering an optimal overall experience and cost structure at scale. The platform's rapid growth created an urgent need to improve speed and performance at scale, which the team saw as the ideal opportunity to begin evaluating a broader range of GenAI models.
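One simple way to picture "a mix of models" is a routing table that sends each creative mode to the model judged best for it. The sketch below is purely illustrative: the mode names echo the modes mentioned above, but the model identifiers, token budgets, and `route_for` helper are hypothetical and do not describe Capitol AI's or OctoAI's actual setup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str       # hypothetical model identifier
    max_tokens: int  # hypothetical per-mode generation budget


# Hypothetical routing table: each creative mode maps to the model
# chosen for it on quality, speed and cost.
ROUTES: dict[str, Route] = {
    "article": Route(model="mistral-7b-article-ft", max_tokens=2048),
    "slideshow": Route(model="mistral-7b-slides-ft", max_tokens=1024),
}

# Fallback for user-created modes that have no dedicated fine-tune.
DEFAULT_ROUTE = Route(model="general-purpose-llm", max_tokens=1024)


def route_for(mode: str) -> Route:
    """Return the route for a mode, falling back to the default model."""
    return ROUTES.get(mode, DEFAULT_ROUTE)


print(route_for("article").model)         # mistral-7b-article-ft
print(route_for("my-custom-mode").model)  # general-purpose-llm
```

Keeping the mapping in data rather than code means a new fine-tune can be rolled in for one mode without touching the others.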

OctoAI allows customers to easily launch and scale production applications on the latest GenAI models, including multiple Stable Diffusion and SDXL models from Stability AI, Llama 2 and Code Llama models from Meta, and Mistral and Mixtral models from Mistral AI. OctoAI serves these models on powerful NVIDIA H100 and NVIDIA A100 GPUs, with AI systems capabilities that enable seamless scaling, efficiency, and flexibility. Customers can run their choice of fine-tuned variants of these models while benefiting from the platform's cost and reliability advantages. OctoAI's price-performance, its fine-tuning capabilities, and its “Model Remix Program” to accelerate open-source adoption all appealed to the team. The engagement kicked off with a proof of concept (POC) that initially focused on image generation.

4x faster images with SDXL on OctoAI

After a successful POC validating speed and quality, the Capitol AI team initially focused on using OctoAI for production image generation. The team's testing confirmed that fine-tuned LoRAs with SDXL produce images with the quality and predictability needed to deliver the desired customer experience. OctoAI's Asset Orchestrator made it easy for the team to use LoRAs and to swap fine-tunes as needed during evaluation and in production, all with predictably low latencies. Migrating Capitol AI's image generation to OctoAI doubled image generation speed, increasing responsiveness and throughput for customers on the platform. Building on this initial success, the team began evaluating language models on OctoAI.
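For readers new to LoRAs: in the standard low-rank adaptation formulation, a base weight matrix W stays frozen and training learns two small matrices B (d × r) and A (r × k), so the effective weight is W + (alpha / r) · B · A. Because the adapter holds only r · (d + k) values instead of d · k, fine-tunes are small and cheap to swap. The pure-Python sketch below illustrates only that math with toy sizes; it is not how OctoAI's Asset Orchestrator or SDXL inference are implemented, and `merge_lora` and `lora_param_count` are hypothetical helper names.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(w, b, a, alpha: float, rank: int):
    """Return the merged weight W + (alpha / rank) * B @ A."""
    scale = alpha / rank
    delta = matmul(b, a)  # low-rank update, same shape as W
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def lora_param_count(d: int, k: int, rank: int) -> int:
    """Trainable values in a rank-r adapter for a d x k weight matrix."""
    return rank * (d + k)


W = [[1.0, 0.0], [0.0, 1.0]]  # toy 2x2 frozen base weight
B = [[1.0], [2.0]]            # d x r = 2x1
A = [[3.0, 4.0]]              # r x k = 1x2
print(merge_lora(W, B, A, alpha=1.0, rank=1))  # [[4.0, 4.0], [6.0, 9.0]]

# Full update vs. rank-8 adapter for an illustrative 4096 x 4096 layer.
print(4096 * 4096, lora_param_count(4096, 4096, rank=8))  # 16777216 65536
```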

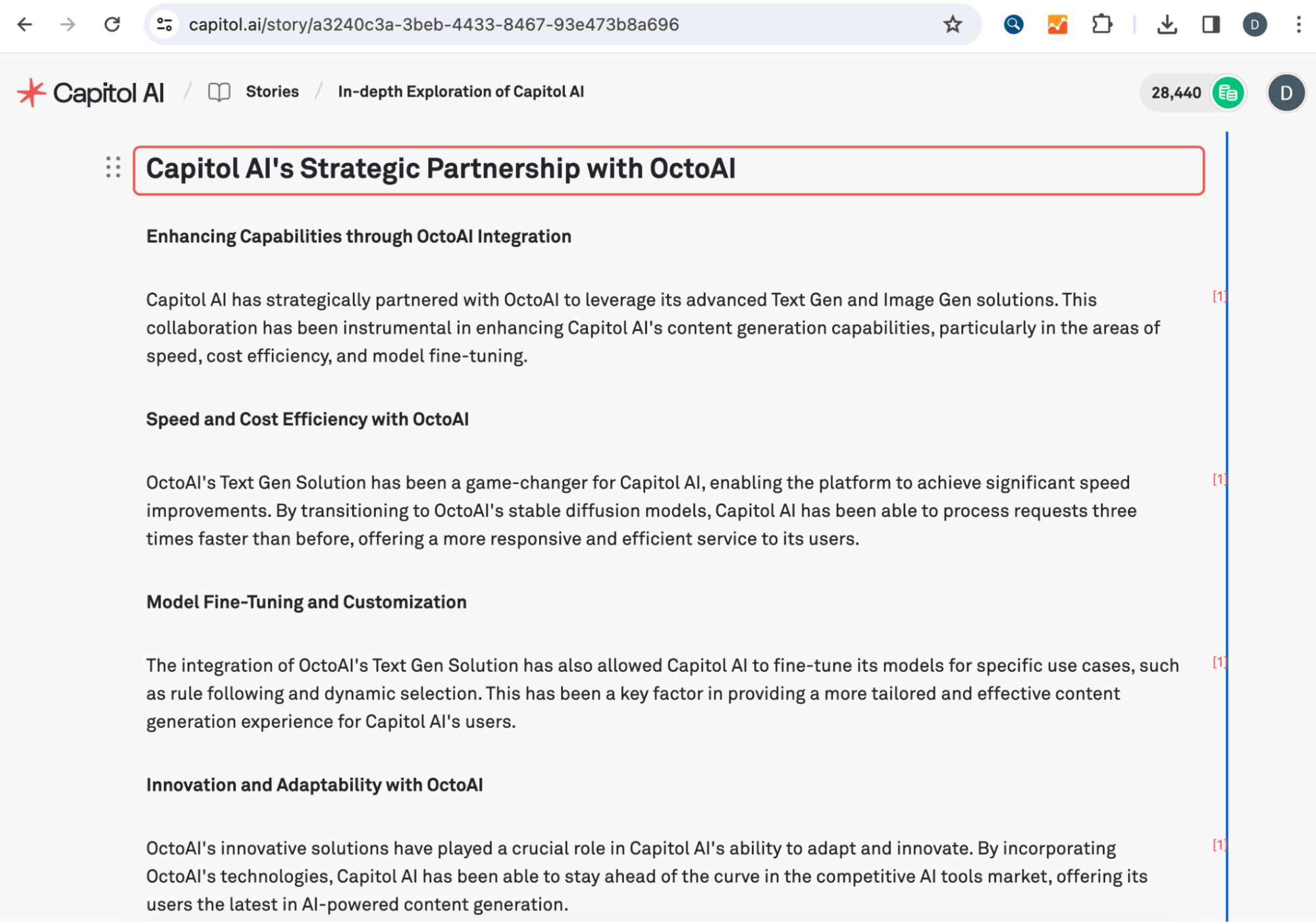

Customized output, 3x the speed, and a quarter of the cost, with fine-tuned Mistral models on OctoAI

Earlier evaluations of small language models had highlighted two key areas Capitol AI would need to address to use these models effectively in the platform: reliable rule-following behavior and consistently JSON-formatted output. Both were essential for the models to integrate with the application and deliver the expected outcomes. Capitol's rapid growth and user traction let the team collect enough data to fine-tune open-source models much sooner than planned.
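To make the JSON requirement concrete, applications commonly guard model output with a validate-and-retry step before using it. The sketch below assumes a hypothetical output contract with `title` and `sections` fields and a `generate` callable standing in for whatever client calls the model; it is a generic illustration, not Capitol AI's code.

```python
import json
from typing import Callable, Optional

# Hypothetical output contract for one creative mode; the schemas
# Capitol AI actually uses are not described in this article.
REQUIRED_FIELDS: dict[str, type] = {"title": str, "sections": list}


def parse_model_output(raw: str) -> Optional[dict]:
    """Return the parsed object if it is valid JSON matching the contract."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None  # Not JSON at all, e.g. chatty preamble text.
    if not isinstance(data, dict):
        return None  # Valid JSON, but not an object.
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            return None  # Missing field or wrong type.
    return data


def generate_json(generate: Callable[[str], str], prompt: str,
                  attempts: int = 3) -> Optional[dict]:
    """Call a text generator until it returns contract-conforming JSON."""
    for _ in range(attempts):
        parsed = parse_model_output(generate(prompt))
        if parsed is not None:
            return parsed
    return None  # Caller decides how to handle repeated failures.


# Fake generator: first reply is prose, second is valid JSON.
replies = iter(["Sure! Here is your outline:",
                '{"title": "Tides", "sections": ["Intro"]}'])
print(generate_json(lambda _prompt: next(replies), "Outline an article on tides"))
# {'title': 'Tides', 'sections': ['Intro']}
```

A guard like this only catches failures; the point of fine-tuning for format consistency is to make the retry path rare.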

Among the models evaluated, Mistral 7B showed the most promise for the desired balance of performance, quality, and cost. With a mix of fine-tuning and prompt engineering, the team achieved the consistent JSON-formatted outputs the Capitol application needed. To improve rule-following behavior, the team fine-tuned the model on thousands of data points already collected on the platform. Several fine-tuned models were used to cover the required range of text generation behaviors, which together power the breadth of creative modes and outputs available to end users. With these models in place, the team began incrementally moving production traffic to its custom fine-tuned Mistral models on OctoAI.
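The article does not say how traffic was shifted. One common approach to an incremental migration is a deterministic, hash-based percentage rollout, sketched below; the backend labels and helper names are hypothetical. Hashing the user ID keeps each user on a stable backend, and raising the percentage only moves additional users over.

```python
import hashlib


def rollout_bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_backend(user_id: str, rollout_percent: int) -> str:
    """Route roughly rollout_percent% of users to the new fine-tuned model.

    Users whose bucket is below the threshold go to the new model, so
    raising the threshold never sends an already-migrated user back.
    """
    if rollout_bucket(user_id) < rollout_percent:
        return "fine_tuned_mistral"  # hypothetical backend label
    return "incumbent_api"           # hypothetical backend label


users = [f"user-{i}" for i in range(10_000)]
share = sum(choose_backend(u, 25) == "fine_tuned_mistral" for u in users) / len(users)
print(f"{share:.1%} of users routed to the fine-tuned model")  # roughly 25%
```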

Early usage confirmed that the fine-tuned models behaved as expected, allowing Capitol AI to keep delivering creative outputs predictably and with high quality. The move to smaller models also tripled text generation speed and improved the overall user experience. Alongside the speed improvement, moving to the OctoAI Text Gen Solution cut Capitol AI's LLM usage costs by 75%. These savings let the team invest more in the experience and delivery layer, further improving the application for users and expanding the creative outcomes possible on Capitol AI.
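A 75% saving leaves a quarter of the original bill (equivalently, 4x lower cost), and a 3x speedup cuts generation time to a third, assuming response time scales inversely with generation speed. The small sketch below works through that arithmetic; the dollar amount and latency are hypothetical, not Capitol AI's actual figures.

```python
def cost_after_saving(old_cost: float, saving: float) -> float:
    """Cost remaining after a fractional saving (0.75 means 75% cheaper)."""
    return old_cost * (1.0 - saving)


def latency_after_speedup(old_latency_s: float, speedup: float) -> float:
    """Latency after an N-times speedup, assuming time scales as 1 / speed."""
    return old_latency_s / speedup


monthly_bill = 10_000.0  # hypothetical pre-migration LLM bill in USD
new_bill = cost_after_saving(monthly_bill, 0.75)
print(new_bill)                         # 2500.0, a quarter of the original
print(monthly_bill / new_bill)          # 4.0, i.e. 4x lower cost
print(latency_after_speedup(6.0, 3.0))  # 2.0 s for a hypothetical 6 s response
```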

We want to be constantly evaluating new models and new capabilities, but running these in-house was a lot of overhead and limited our momentum. OctoAI simplifies this, allowing us to build against our choice of models and fine-tuned variants through one simple interface. This has accelerated our adoption of new models and fine-tuning-enabled capabilities, and we’re excited to work with OctoAI as we bring new capabilities and models to Capitol AI.

Tom Hallaran, Co-Founder & CTO @ Capitol AI

Try the OctoAI Text Gen Solution today

You can start evaluating your desired mix of language models on the OctoAI Text Gen Solution with a free trial today. You can also get started with Capitol.ai at no cost, with free sign-up credits for your first articles on the platform. If you’d like to engage with the broader OctoAI community and team, please join our Discord.

For customers currently using a closed-source LLM such as GPT-3.5 Turbo, GPT-4, or GPT-4 Turbo from OpenAI, OctoAI has a new promotion to help accelerate adoption of open-source LLMs. You can read more about it in the OctoAI Model Remix Program introduction.
