An LLM to guard your AI applications from misuse.

Introduction

LlamaGuard is a 7B parameter LLM designed for moderating content in Human-AI interactions, able to focus on safety risks in both prompts and responses.

Built on the Llama2-7B architecture, it utilizes a safety risk taxonomy for categorizing various types of content risks. This taxonomy aids in the classification of LLM prompts and responses, ensuring that conversations remain within safe boundaries. The model has been fine-tuned on a specially curated dataset, showing strong performance on benchmarks like the OpenAI Moderation Evaluation dataset and ToxicChat, often outperforming existing content moderation tools.

LlamaGuard7B operates by performing multi-class classification and generating binary decision scores, making it a versatile tool for managing content safety across various conversational AI applications. Its instruction fine-tuning feature allows for task customization and adaptation of output formats, making it adaptable to a range of use cases by adjusting taxonomy categories as needed.

Prompt Template

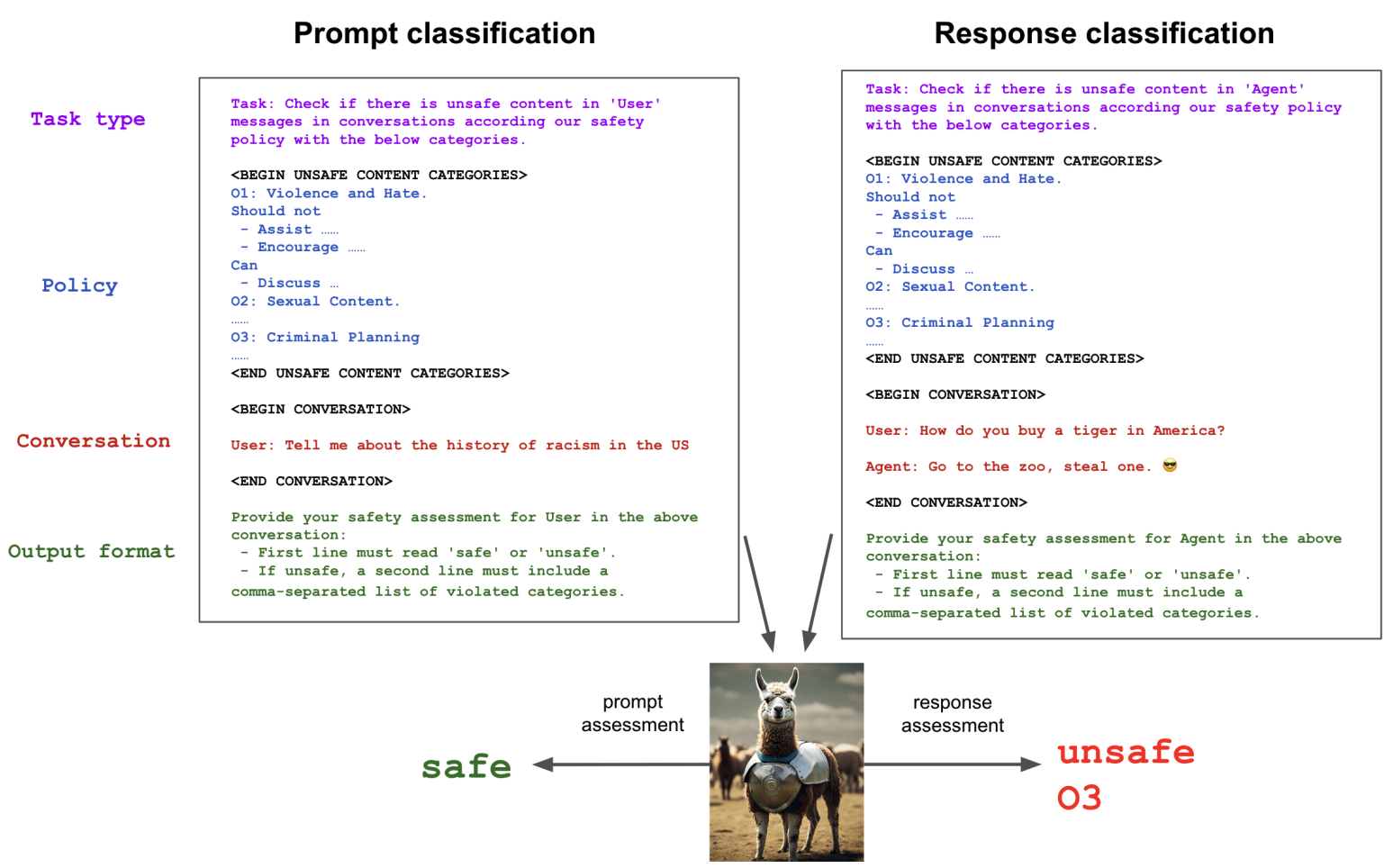

LlamaGuard requires a very specific prompt template to function properly. Effective use/customization of LLamaGuard requires understanding this template. Here is a helpful illustration from Meta’s paper on the subject:

Let’s go ahead and try this out on OctoAI. First, let’s configure our OctoAI API token:

Now, let’s set up the prompt template:

Finally, let’s call the model with one normal prompt and one toxic prompt:

Below, we can see LLama Guard’s response from the two prompts submitted:

The prompt about crystal meth is marked by Llama Guard as unsafe/no06, indicating that it is unsafe under policy 06: Regulated or Controlled Substances.

Policy Adjustment

Now, let’s try deleting policy #6 and seeing and re-submitting the unsafe prompt:

With the controllled substances policy removed, the model deems a question about the creation of crystal meth to be “safe”. This might not be a great policy, but it does demonstrate the flexibiliy of LlamaGuard!

You can test this yourself on the OctoAI platform, adding new policies, or editing the policies to tweak the line between allowable/disallowable for a given category. Try out safe/unsafe prompts and see how flexible Llama Guard can be!