Today, OctoAI is announcing a collaboration with NVIDIA around NVIDIA NIM to bring optimized generative AI models to enterprises across a range of serverless cloud, private cloud, and on-prem deployment options.

As part of this collaboration, OctoAI will integrate the new NVIDIA NIM microservices, which package models optimized for deployment, along with other NVIDIA microservices, into its generative AI platform. These will serve customer use cases such as language and image generation, visual reasoning, guardrails, biology, medical imaging, and genomics.

Additionally, developers will be able to experiment with inference-optimized models via ai.nvidia.com and deploy them on OctoAI’s platform, and also experiment with and deploy a selection of optimized OctoAI model containers via NVIDIA AI Enterprise. This gives developers inference performance optimized for high throughput and low latency at production scale on NVIDIA GPUs, including H100 and A100 Tensor Core GPUs.

Read on to learn more. You can sign up and get started with OctoAI’s text and media generation solutions today, or contact us for more information on additional deployment options.

OctoAI with NVIDIA NIM for powerful generative AI infrastructure at scale

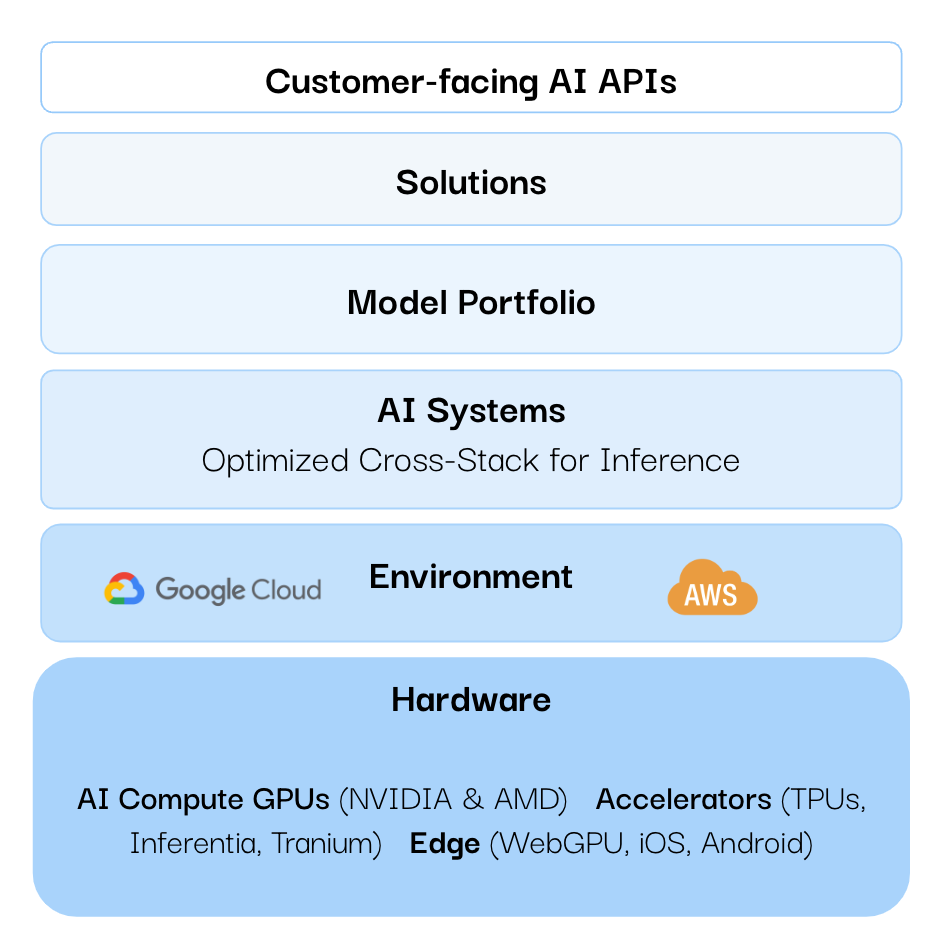

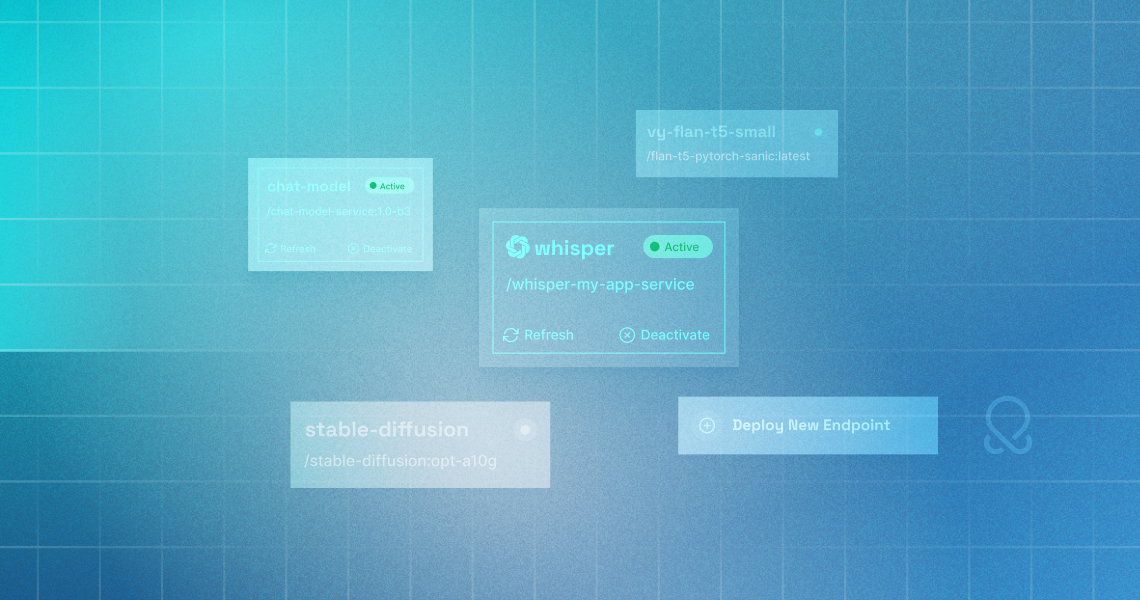

OctoAI is an independent generative AI model inference platform with a deep history of open source AI systems innovation: its team includes the original creators of Apache TVM, MLC-LLM, and XGBoost. The OctoAI platform lets developers abstract away the complexity of running, managing, and scaling their generative AI stack.

OctoAI offers a range of high-performance open-source models for text generation, including Llama 2, Code Llama, Llama Guard, Mistral, Mixtral, Gemma, and Qwen, as well as custom checkpoints and LoRAs for these model architectures. OctoAI also supports a range of media generation capabilities, including Stable Diffusion and Stable Video Diffusion, as well as image-based utilities such as inpainting, outpainting, upscaling, background removal, photo merge, and fine-tuning.

OctoAI will now integrate NVIDIA NIM, a set of easy-to-use microservices that is part of NVIDIA AI Enterprise and is designed to accelerate the deployment of generative AI across your enterprise. This versatile runtime supports a broad spectrum of AI models, from open-source community models to NVIDIA AI foundation models and custom AI models. Because it uses industry-standard APIs, developers can build enterprise-grade AI applications with just a few lines of code.

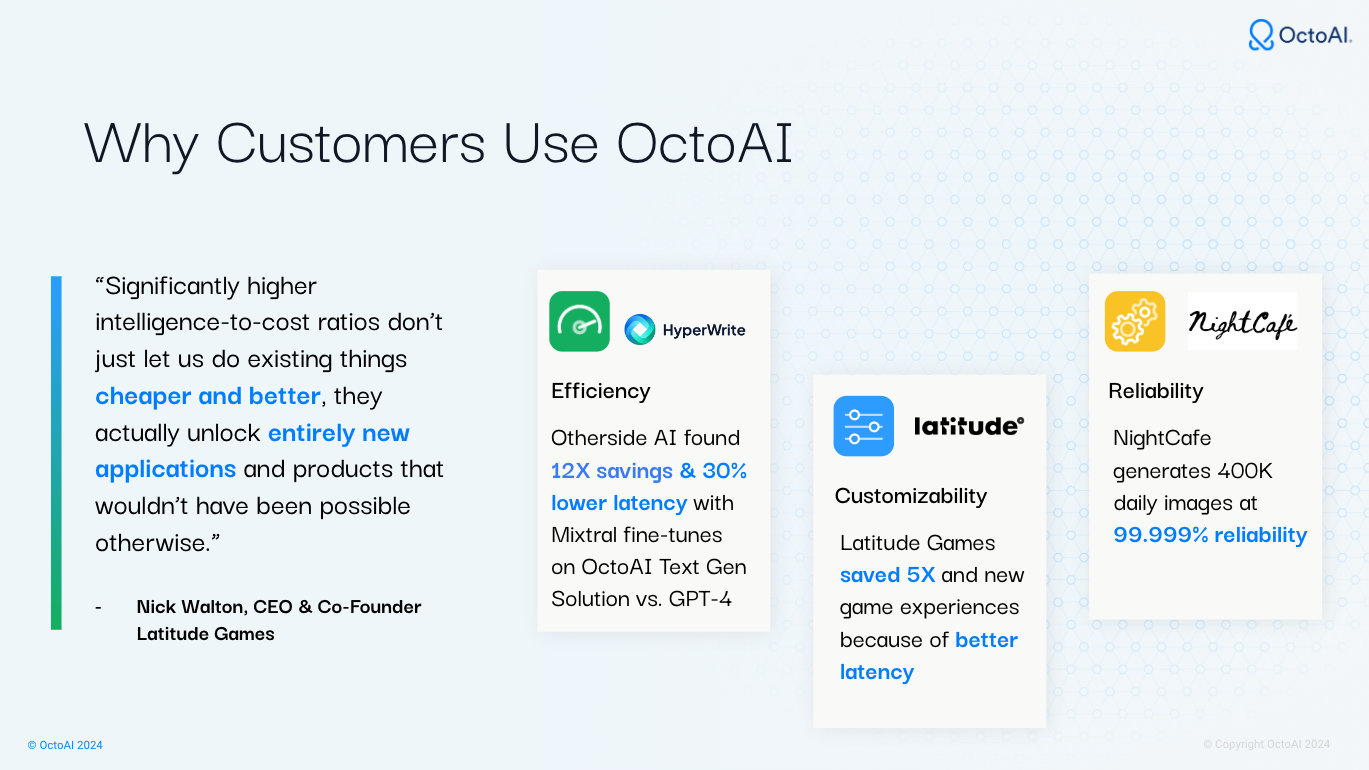

OctoAI’s customers have reduced costs and improved development agility, allowing them to focus on building product enhancements and AI-powered experiences that drive higher user engagement. OctoAI’s customers can also host custom fine-tuned LLMs to improve generation speed while reducing costs, meeting the need to produce high-quality outputs while running multiple customized models at production scale.

A market shifting from experimentation to production use cases

The generative AI market has undergone a seismic shift in recent years. At OctoAI, we saw customers focus on experimentation throughout 2023 to prove out the value of generative AI-backed use cases. Heading into 2024, a growing number of customers identified the need for a durable, secure, and resilient platform that delivers consistent performance under load as they moved from experimentation to production.

As enterprises move toward production use cases, they have a greater need to control the underlying models, protect data and maintain its confidentiality, and protect IP. As a result, customers are increasingly interested in dedicated or private cloud deployments in their own environments, which give them full control and data sovereignty.

OctoAI is meeting this need by offering multiple deployment options, ranging from serverless to secure dedicated cloud deployments to managed private cloud deployments, and finally to customer self-hosted containers.

The integration of NVIDIA NIM also helps address this need by letting developers move from standard APIs for generative AI services in the cloud to deployments in their own environment with minimal code changes, and with equivalent or better performance, latency, and cost-effectiveness.
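As a rough illustration of what "minimal code changes" can look like, here is a minimal sketch that assumes both the cloud service and the self-hosted container accept the same OpenAI-style chat completions request. NVIDIA documents an OpenAI-compatible API for its NIM LLM microservices, but check the API reference for the specific model you deploy. The base URLs, the `build_chat_request` helper, and the model name are hypothetical placeholders, not OctoAI or NVIDIA values. The function only builds the request and does not send it.

```python
import json
import urllib.request

# Hypothetical endpoints for illustration only; substitute the URLs your
# cloud service and self-hosted container actually expose.
CLOUD_BASE_URL = "https://api.example.com/v1"
SELF_HOSTED_BASE_URL = "http://localhost:8000/v1"


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request.

    Assumption: both environments accept the same payload, so only
    base_url (and possibly the API key) changes between them.
    """
    payload = {
        "model": model,  # hypothetical model name supplied by the caller
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    # The same call targets either environment; only the base URL differs.
    for base in (CLOUD_BASE_URL, SELF_HOSTED_BASE_URL):
        req = build_chat_request(base, "example-model", "Hello!")
        print(req.get_method(), req.full_url)
    # To actually send a request: urllib.request.urlopen(req), then
    # json.load() the response body.
```

Keeping the payload identical and isolating the endpoint in a single parameter is what makes the switch a configuration change rather than a code rewrite, provided the two APIs really are compatible for the features you use.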

Enterprises and developers now have multiple ways to access prebuilt containers for the latest generative AI models, combining OctoAI’s expertise in running generative AI at scale across a range of cloud and on-prem environments with the latest NVIDIA microservices.

Try OctoAI and NVIDIA NIM today

OctoAI’s generative AI services are available to try out with a free trial today. For access to OctoAI’s optimized and prepackaged LLM containers, please contact us. Stay in touch with us on Discord, X, or LinkedIn.

Start experimenting with generative AI use cases using NVIDIA NIM through the NVIDIA API catalog, including select OctoAI optimized models when available.
