Building the OctoAI Asset Orchestrator — an engineering perspective

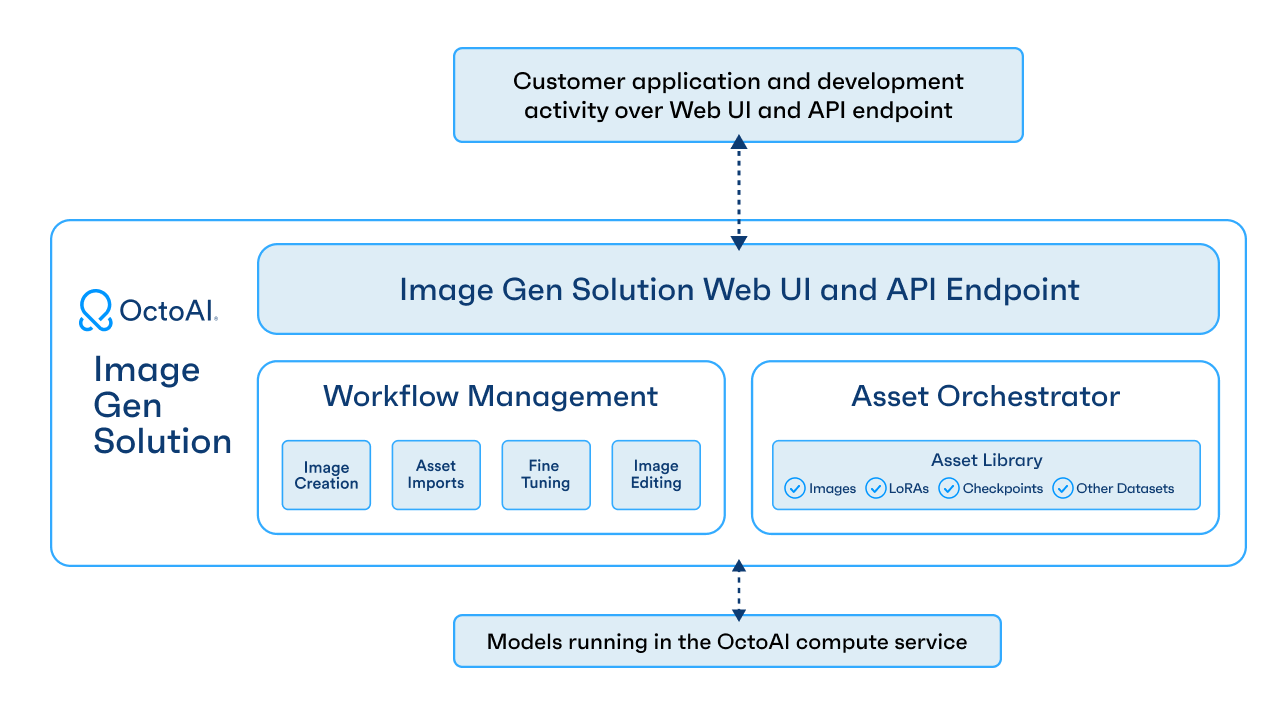

A few days ago, we launched the OctoAI Image Gen Solution, an end-to-end offering that lets app builders create differentiated apps using Stable Diffusion XL (SDXL) and other image models. We also talked briefly about the Asset Orchestrator, the underlying technology that enables image customization for enterprise use cases. Well, we thought it would be fun to share a bit more about how we approached the challenges, and some of the key components we built to enable this experience.

Asset Orchestrator enables customization at scale

Throughout this year, we’ve heard from customers that Stable Diffusion out of the box does not meet their needs. To make the model useful in real-world applications, customers need images that adhere to consistent styles, capture specific objects, avoid distortions, and are generated with low latency. Before using OctoAI, developers spent significant time and effort cobbling together tools to address these issues, and some teams were forced to abandon the effort altogether and fall back on an uncustomized endpoint.

Common approaches to customizing Stable Diffusion, such as fine-tuned LoRAs, checkpoints, and textual inversions, are popular in the community, but using them at scale is not easy for app developers today. Customers are forced into tradeoffs between over-provisioning hardware, tolerating slow cold starts, and limiting how much model customization they use.

Our Asset Orchestrator addresses exactly this challenge. Our unique technology delivers peak performance and reliability when dynamically loading assets from the asset library onto the customer’s SD or SDXL endpoint. The asset library includes assets pre-populated by OctoAI, assets imported by the customer, and assets created through OctoAI fine-tuning. Customers can manage and orchestrate hundreds to thousands of assets in their asset library and use them in image generation as needed, with virtually zero cold start, no over-provisioning, and proven scalability.
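To make the idea of an asset library more concrete, here is a minimal sketch of a registry that tracks the three asset origins described above and resolves the assets a generation request references. The class and field names (`AssetLibrary`, `AssetOrigin`, `resolve`) are hypothetical and purely illustrative; this is not OctoAI's implementation or API.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class AssetOrigin(Enum):
    """Where an asset came from (hypothetical labels for the three sources)."""
    PREPOPULATED = "prepopulated"  # provided by the platform
    IMPORTED = "imported"          # uploaded by the customer
    FINE_TUNED = "fine_tuned"      # produced by a fine-tuning job


@dataclass(frozen=True)
class Asset:
    name: str
    kind: str            # e.g. "checkpoint", "lora", "textual_inversion"
    origin: AssetOrigin
    size_bytes: int


class AssetLibrary:
    """Hypothetical in-memory registry; a real library would be backed by storage."""

    def __init__(self) -> None:
        self._assets: dict[str, Asset] = {}

    def add(self, asset: Asset) -> None:
        self._assets[asset.name] = asset

    def resolve(self, names: list[str]) -> list[Asset]:
        """Look up every asset a request references, failing fast on unknown names."""
        missing = [n for n in names if n not in self._assets]
        if missing:
            raise KeyError(f"unknown assets: {missing}")
        return [self._assets[n] for n in names]
```

Resolving names up front lets a request be rejected before any GPU time is spent, and the resolved metadata (kind, size) is what a loader would need to decide how to fetch each asset.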

Our approach: a layer-cake architecture built with customization in mind

Layer 1: Compiler optimization

The OctoAI team has a deep background in machine learning and compiler optimization, having created seminal technologies like Apache TVM, XGBoost, MLC-LLM, and more. We apply this expertise to optimize generative AI models like Stable Diffusion, lowering the latency and cost of running them, and we pass those cost savings on to our users.

Our unique approach to compiler-driven model optimization is not only faster than the rest of the market; more importantly, it preserves users’ freedom to customize the model, e.g. with different assets like checkpoints and LoRAs, samplers, style_presets, and resolutions. Custom versions of Stable Diffusion on OctoAI receive the same compiler optimizations as base SDXL.
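Because the same optimizations apply regardless of which assets are chosen, customization can live entirely in the request rather than in a separately deployed model. The sketch below illustrates that idea with a request object carrying the knobs mentioned above. The schema and field names are assumptions for illustration, not OctoAI's documented API; the multiple-of-8 check reflects the 8x spatial downsampling of the SD/SDXL VAE.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, Optional


@dataclass
class GenerationRequest:
    """Hypothetical request schema; field names are illustrative, not a real API."""
    prompt: str
    checkpoint: str = "base-sdxl"                            # checkpoint asset to use
    loras: Dict[str, float] = field(default_factory=dict)    # LoRA name -> blend weight
    sampler: str = "DDIM"
    style_preset: Optional[str] = None
    width: int = 1024
    height: int = 1024
    steps: int = 30

    def validate(self) -> None:
        # SD/SDXL VAEs downsample by 8, so pixel dimensions must be multiples of 8.
        if self.width % 8 or self.height % 8:
            raise ValueError("width and height must be multiples of 8")
        if self.steps <= 0:
            raise ValueError("steps must be positive")

    def to_json(self) -> str:
        """Validate, then serialize the request as a JSON payload."""
        self.validate()
        return json.dumps(asdict(self))
```

For example, `GenerationRequest(prompt="a lighthouse at dusk", loras={"watercolor-style": 0.8}).to_json()` yields a payload that a single shared, optimized pipeline could serve, with only the referenced assets differing between requests.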

Layer 2: Asset Orchestrator with intelligent caching and data transfer

Through a combination of multi-layer caching, intelligent routing and accelerated data transfers, the Asset Orchestrator works to ensure that desired assets, be they small textual inversions or large multi-gigabyte checkpoints, are readily available for models to use as image generation requests come in. A large number of assets can be simultaneously cached, and caching is shared across model instances, so cold asset retrievals over the network are minimized. Likewise, high-traffic assets are kept fully cached at all times to virtually eliminate asset load times for the most popular stylizations.
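As an illustration of the caching ideas in this layer, here is a minimal sketch of a tiered cache: a per-instance LRU tier bounded in bytes, a tier shared across model instances (a plain dict stands in for it here), and a cold network fetch as the last resort. "Pinned" entries model high-traffic assets that stay cached at all times. All names, including `fetch_remote`, are hypothetical; this is a generic technique, not OctoAI's actual design.

```python
from collections import OrderedDict
from typing import Callable, Dict, Iterable


class TieredAssetCache:
    """Illustrative multi-layer asset cache (not OctoAI's implementation).

    Tier 1: per-instance LRU cache bounded by capacity_bytes.
    Tier 2: `shared`, a mapping shared by all model instances.
    Tier 3: `fetch_remote`, a hypothetical slow network download.
    Names in `pinned` are never evicted from tier 1.
    """

    def __init__(self, capacity_bytes: int, shared: Dict[str, bytes],
                 fetch_remote: Callable[[str], bytes], pinned: Iterable[str] = ()):
        self.capacity_bytes = capacity_bytes
        self.shared = shared
        self.fetch_remote = fetch_remote
        self.pinned = set(pinned)
        self.local: "OrderedDict[str, bytes]" = OrderedDict()  # oldest entry first
        self.used_bytes = 0

    def warm_pinned(self) -> None:
        """Preload pinned assets so popular stylizations never take the cold path."""
        for name in sorted(self.pinned):
            self.get(name)

    def get(self, name: str) -> bytes:
        if name in self.local:
            self.local.move_to_end(name)     # tier-1 hit: mark as most recently used
            return self.local[name]
        data = self.shared.get(name)         # tier-2 lookup
        if data is None:
            data = self.fetch_remote(name)   # cold retrieval over the network
            self.shared[name] = data         # later misses on any instance hit tier 2
        self._insert(name, data)
        return data

    def _insert(self, name: str, data: bytes) -> None:
        self.local[name] = data
        self.used_bytes += len(data)
        # Evict least recently used, unpinned entries until we are within capacity.
        # If only pinned entries remain, the tier may stay over capacity.
        for victim in list(self.local):
            if self.used_bytes <= self.capacity_bytes:
                break
            if victim in self.pinned or victim == name:
                continue
            self.used_bytes -= len(self.local.pop(victim))
```

Sharing tier 2 across instances is what keeps network retrievals rare: once any instance has fetched an asset, every other instance can load it without going back to remote storage.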

Layer 3: Systems-level IP

At the systems level, we built technical IP so that our users benefit from (1) reliable uptime and generation speed at scale, (2) virtually zero cold start, and (3) the lowest possible price point. Across millions of customer image generations a day, we have maintained 99.999% uptime and tight latency bounds. For example, a k6 load test that we ran on our default SDXL image generation workload (30 steps, 1024x1024 resolution, DDIM sampler) reported an average latency of 2.86 seconds, a minimum of 2.85 seconds, and a maximum of 2.88 seconds. In other words, generation speed at scale is consistent and reliable for our users.
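One simple way to quantify "consistent" is the gap between the slowest and fastest requests relative to the mean. Using the load-test figures above, (2.88 - 2.85) / 2.86 is roughly 1%. The helper below is a sketch that computes the same summary from raw per-request latencies (for example, exported from a load-testing tool); its names are illustrative assumptions.

```python
import statistics
from dataclasses import dataclass
from typing import List


@dataclass
class LatencySummary:
    mean_s: float
    min_s: float
    max_s: float

    @property
    def relative_spread(self) -> float:
        """(max - min) / mean: a unitless measure of how tightly latencies cluster."""
        return (self.max_s - self.min_s) / self.mean_s


def summarize(latencies_s: List[float]) -> LatencySummary:
    """Reduce per-request latencies in seconds to mean, min, and max."""
    if not latencies_s:
        raise ValueError("need at least one latency sample")
    return LatencySummary(statistics.fmean(latencies_s), min(latencies_s), max(latencies_s))


# Plugging in the aggregate numbers reported by the load test:
reported = LatencySummary(mean_s=2.86, min_s=2.85, max_s=2.88)
print(f"{reported.relative_spread:.2%}")  # prints 1.05%
```

Note that min/max only bound the extremes; for a fuller picture, percentiles such as p95 or p99 are usually reported alongside them.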

In addition, Image Gen Solution users benefit from virtually zero cold start because they all call the same inference endpoint, which is backed by a large pool of hardware resources. Users don’t have to wait for an endpoint to start from a cold state (provisioning hardware, pulling a container image onto it, loading model weights, etc.). Furthermore, we built a unique load-balancing approach that has enabled us to achieve near-100% hardware utilization across our entire fleet. This lets us achieve economies of scale and pass the cost savings on to our users, who pay only for the inferences they run rather than for reserving an entire GPU.
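Because every user calls the same endpoint, any warm worker in the pool can serve any request, which is what makes high utilization possible. The sketch below shows a generic least-loaded router over such a shared pool. It is a textbook technique swapped in for illustration, not OctoAI's load-balancing approach, and the `Worker` and `LeastLoadedRouter` names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Worker:
    name: str
    max_concurrency: int     # requests this GPU worker can run at once
    in_flight: int = 0

    @property
    def free_slots(self) -> int:
        return self.max_concurrency - self.in_flight


class LeastLoadedRouter:
    """Generic least-loaded routing over one shared pool of warm workers."""

    def __init__(self, workers: List[Worker]):
        self.workers = workers

    def acquire(self) -> Optional[Worker]:
        # Pick the worker with the most free capacity (ties go to the first one).
        best = max(self.workers, key=lambda w: w.free_slots)
        if best.free_slots <= 0:
            return None      # pool saturated: the caller should queue or retry
        best.in_flight += 1
        return best

    def release(self, worker: Worker) -> None:
        worker.in_flight -= 1

    def utilization(self) -> float:
        """Fraction of total pool capacity currently in use."""
        total = sum(w.max_concurrency for w in self.workers)
        return sum(w.in_flight for w in self.workers) / total
```

Compared with round-robin, choosing the least-loaded worker adapts to requests of uneven duration, so capacity freed by a short request is reused immediately instead of sitting idle.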

Try the OctoAI Image Gen Solution today

Your choice of models on our SaaS or in your environment

Run any model or checkpoint on our efficient, reliable, and customizable API endpoints. Sign up and start building in minutes.