Stable Diffusion at scale: Designing for millions of daily image generations

Generative AI workloads are far more computationally heavy than the leading deep learning models from five years ago - even the most optimized Stable Diffusion XL endpoints can take seconds to generate an image. Running generative models in the cloud requires reliable infrastructure that can load and run these large models efficiently, and scale up and down quickly to handle bursts of traffic. Some OctoAI customers today generate over a million Stable Diffusion images a day. OctoAI is built to support this kind of scale and burstiness in a predictable manner. In this blog, we’ll share three challenges we tackled while building our AI platform: intelligent request routing, efficient auto-scaling and fast cold starts.

At a high level, both traditional web servers and generative AI workloads accept requests from clients and dispatch them to backend servers for processing. However, generative AI workloads have different characteristics from traditional web traffic. Web backends are served well by L4/L7 load balancers, which handle thousands of concurrent requests that may each take 50 to 100 ms to process. These load balancers are push based: they maintain a list of worker nodes and use round robin or another scheduling algorithm to fan out incoming requests to the workers. Now consider generative AI workloads: each inference can take seconds to process, and concurrency needs to be handled more precisely due to GPU and deep learning framework software constraints.

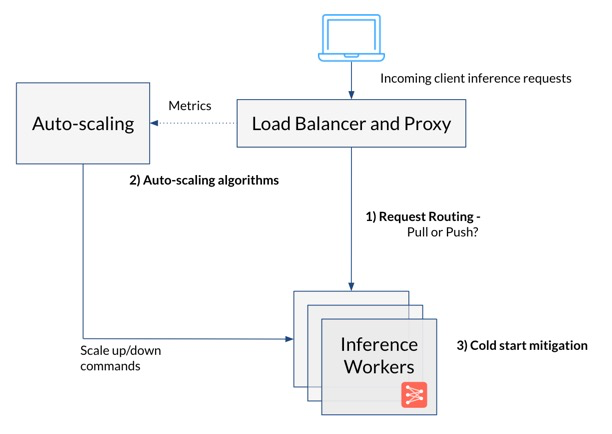

To address these unique properties, we had to make three key architecture decisions as we built the OctoAI platform:

What is the best way to route incoming inference requests to workers so that all workers stay uniformly busy? For GPU inference, we aim to run the GPUs as hot as possible, distributing pending work efficiently to minimize GPU idle time.

How do we implement the auto-scaling algorithm to launch new workers quickly when needed but not react to transient noise?

Once a new worker is added, how can we reduce the cold start time to make it ready for inference as fast as possible?

Request routing

In the context of building ML inference client-server systems, request routing is the process of directing client inference requests to a model container that can fulfill them. The goal of designing such a system is to maximize model container utilization while minimizing latency.

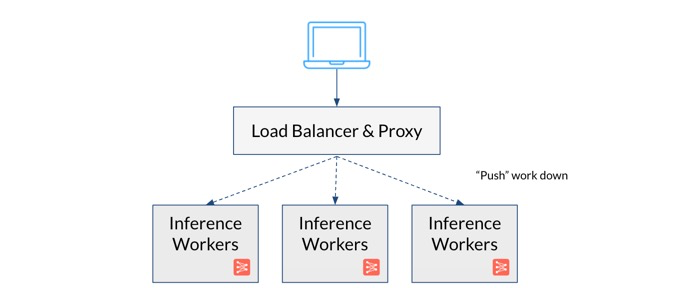

“Push”-based Routing

Our initial implementation used an L7 load balancer that pushed requests down to the appropriate worker node. While this approach worked, it suffered from load skew: some workers were overutilized while others were underutilized. This imbalance added latency (especially at p90 and above) and reduced throughput. Because the load balancer had only limited utilization information about the Inference Workers, it fanned out incoming inference requests using either round robin or random assignment. It was possible to oversaturate the workers to keep them all busy and avoid idle workers, but this sometimes resulted in long backlogs on some workers. Heterogeneous generative AI workloads exacerbated the problem - for example, Stable Diffusion inference time can differ drastically depending on the number of steps or the size of the image being generated.

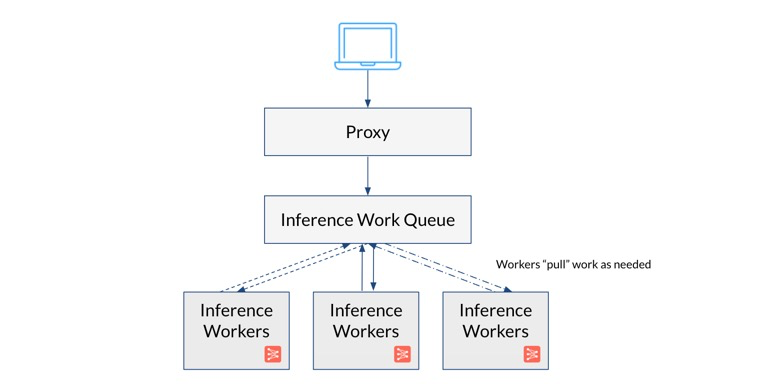

“Pull”-based Routing

We can reduce latency and improve utilization by storing work requests in a queue. In this model, the proxy tier offloads requests to a FIFO queue, and the inference workers pull requests from the queue whenever they are idle. This “pull” approach improves utilization because idle workers immediately fetch available work from the queue, whereas in the push model workers had to wait for a request to percolate through the load balancer. Although the queue itself adds some latency, the pull approach is a latency win overall because it avoids creating hot spots in the worker fleet. For comparison, a typical queue latency is 50 ms, whereas the penalty for landing on a busy worker node is multiple seconds of inference time.
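To make the load-skew argument concrete, here is a toy simulation, not OctoAI's implementation. It assumes a burst of requests that all arrive at time zero, and compares round-robin push against workers pulling from a shared FIFO queue. The function names and the workload are illustrative assumptions.

```python
import heapq

def simulate_push(durations, n_workers):
    """Round-robin push: request i goes to worker i % n_workers, ignoring backlog."""
    free_at = [0.0] * n_workers      # time at which each worker finishes its backlog
    completion_times = []
    for i, duration in enumerate(durations):
        worker = i % n_workers
        free_at[worker] += duration  # the request waits behind that worker's backlog
        completion_times.append(free_at[worker])
    return completion_times

def simulate_pull(durations, n_workers):
    """Pull: the worker that becomes idle first takes the next request in FIFO order."""
    idle_heap = [(0.0, worker) for worker in range(n_workers)]
    heapq.heapify(idle_heap)
    completion_times = []
    for duration in durations:
        idle_time, worker = heapq.heappop(idle_heap)
        finish = idle_time + duration
        completion_times.append(finish)
        heapq.heappush(idle_heap, (finish, worker))
    return completion_times

# Hypothetical heterogeneous burst: every fourth request is 10x more expensive.
workload = [10, 1, 1, 1] * 5
worst_push = max(simulate_push(workload, 4))
worst_pull = max(simulate_pull(workload, 4))
```

In this contrived workload, round robin sends every expensive request to the same worker, so the worst completion time is 50 time units under push versus 22 under pull. Real traffic is less adversarial, but the same mechanism drives tail latency.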

One unresolved question is how responses get returned to the proxy. One approach is to use a separate queue for passing back responses. This approach would work for image generation, but doesn’t generalize well to workloads that utilize streaming requests or replies (e.g., text generation via LLMs). To support streaming request patterns, we split request handling into two phases. In the first phase, we use the queue to select an inference worker for a given request. In the second phase, we pass the request to the worker using regular HTTP. In this manner, we can support arbitrary HTTP requests (including streaming responses, chunked encodings, etc.), while improving the performance and utilization of the fleet.
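The two-phase flow can be sketched as follows. This is a minimal in-process illustration under stated assumptions: `WorkerSelector`, `handle_request`, and the `send_http` callable are hypothetical names, and a real system would use a networked queue and an actual HTTP client.

```python
import queue
import threading
from concurrent.futures import Future

class WorkerSelector:
    """Phase 1: a FIFO queue of tickets that idle workers claim."""

    def __init__(self):
        self._tickets = queue.Queue()

    def request_worker(self) -> Future:
        """Proxy side: enqueue a ticket and return a Future for the worker address."""
        ticket = Future()
        self._tickets.put(ticket)
        return ticket

    def claim(self, worker_address: str) -> None:
        """Worker side: block until a ticket is available, then claim it."""
        ticket = self._tickets.get()
        ticket.set_result(worker_address)

def handle_request(selector, send_http, payload, timeout=5.0):
    """Proxy flow: pick a worker through the queue, then talk to it directly."""
    worker_address = selector.request_worker().result(timeout=timeout)
    # Phase 2: an ordinary HTTP exchange (possibly streaming) with the chosen worker.
    return send_http(worker_address, payload)

# Usage with a stand-in worker thread and a fake HTTP call.
selector = WorkerSelector()
worker = threading.Thread(target=selector.claim, args=("worker-1:8080",))
worker.start()
reply = handle_request(selector, lambda addr, body: f"{addr} handled {body}", "a prompt")
worker.join()
```

Only the small ticket travels through the queue, so the request and response bodies are free to use any HTTP feature, which is the property the text relies on for streaming.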

In the future we’re looking to expand this framework with additional features such as inference priorities, SLA deadlines, retries and cache-aware routing.

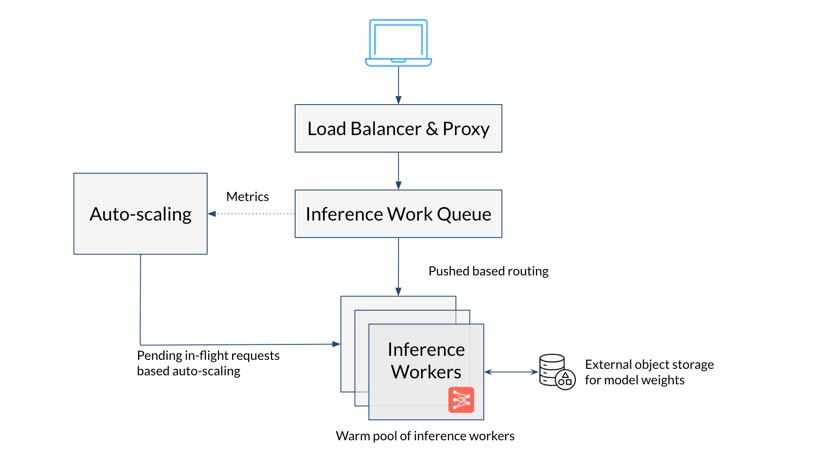

Auto-scaling

Automatic scaling is crucial for deploying scalable and cost-effective systems. As client traffic increases or decreases, our inference platform needs to scale both down to and up from zero replicas, and up to an unbounded number of replicas (or a maximum defined by the customer). This is a classic fleet utilization problem: the fleet of inference workers must be managed to meet fluctuating demand, with the added twist of scale-to-zero.

Over-provisioning workers leads to wasted computational power and increased costs, especially when using expensive modern GPUs, while under-provisioning can result in slow response times, degraded quality of service, or even service outages. Additionally, generative AI workloads can be unpredictable with fluctuating demand, requiring the system to quickly adjust its resource allocation.

Generative AI workloads also tend to have poor concurrency - they have limited ability to perform multiple tasks simultaneously on a single GPU. Typically a GPU can only work on one or a small number of inference requests at once, so concurrency needs to be handled via auto-scaling by having more GPUs available.

We considered various metrics and techniques to control auto-scaling. Watching GPU utilization in the workers was suboptimal because there is no utilization to measure when there are zero pending requests. Instead, we settled on using the “logical utilization” of the system to make scaling decisions. This metric combines the number of in-flight requests, the system’s current capacity, and a configurable load factor.
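The post does not give the exact formula, so the following is one plausible way to combine those three inputs, offered as a sketch only. The function names, the `per_replica_concurrency` parameter, and the interpretation of the load factor as a target fraction of capacity are all assumptions.

```python
import math

def logical_utilization(in_flight: int, replicas: int, per_replica_concurrency: int = 1) -> float:
    """In-flight requests divided by the number of requests the fleet can run at once."""
    capacity = replicas * per_replica_concurrency
    if capacity == 0:
        # With zero replicas, any pending request means demand exceeds capacity.
        return math.inf if in_flight > 0 else 0.0
    return in_flight / capacity

def desired_replicas(in_flight: int, per_replica_concurrency: int = 1,
                     load_factor: float = 0.75, max_replicas=None) -> int:
    """Replica count that keeps logical utilization at or below load_factor."""
    if in_flight == 0:
        return 0  # scale to zero when nothing is pending
    target = math.ceil(in_flight / (per_replica_concurrency * load_factor))
    if max_replicas is not None:
        target = min(target, max_replicas)
    return target
```

Unlike GPU utilization, this signal is defined even when no replica is running, which is what allows a scale-from-zero decision.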

We also considered how to avoid overreacting to transient signals. With our current auto-scaling algorithm, we wait for a consistent signal over several time periods before making an auto-scaling decision. Additionally, we limit the size of in-flight scale-up operations to the number of current replicas. In effect, this at most doubles the number of Inference Workers in any scaling window, so the auto-scaling algorithm does not greedily overreact to a growing queue.

Looking to the future, we are investigating different classes of service for auto-scaling. For example, a customer may have multiple tiers of users (free, paid, enterprise) and may want to provide different latency constraints for each tier. We are also exploring an alternate scaling mechanism for batch scaling vs. real-time inference scaling to accommodate different demands.
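A minimal sketch of these two safeguards follows. The class name, the window count, the scale-down rule, and the special case for scaling from zero are assumptions, since the post only describes the consistent-signal requirement and the doubling cap.

```python
from collections import deque

class StableScaler:
    """Acts only on a signal that is consistent across several windows."""

    def __init__(self, windows: int = 3):
        self.history = deque(maxlen=windows)

    def decide(self, current: int, desired: int) -> int:
        """Return the replica count to run, given this window's desired count."""
        self.history.append(desired)
        if len(self.history) < self.history.maxlen:
            return current  # not enough observations yet
        if all(d > current for d in self.history):
            target = min(self.history)  # demand level seen in every window
            # Cap the scale-up at the current replica count (at most doubling).
            # Assumption: allow a single replica when scaling up from zero.
            step_cap = max(current, 1)
            return min(target, current + step_cap)
        if all(d < current for d in self.history):
            # Assumption: scale down conservatively, to the highest recent demand.
            return max(self.history)
        return current  # mixed signal, treat as transient noise
```

With a three-window history, a single spike in desired replicas leaves the fleet unchanged, while sustained demand for 10 replicas grows a fleet of 2 to 4 and then to 8 rather than jumping straight to 10.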

Cold starts

Smart auto-scaling gets us Inference Workers when we need them and terminates workers when we don’t, but it doesn’t make new workers immediately available for inference. When a new container needs to be started, there's a "cold start" latency involved. This is the time taken to pull the container image from a registry, update and instantiate it, load model weights into GPU memory, and complete the other tasks required to ready the container for handling inference requests. This can be a time-consuming process, especially for generative AI containers, and during this period the worker queue may continue to build up. Would it be possible to make new Inference Workers ready to take on work as soon as possible?

Cold starts are a general problem for many kinds of on-demand infrastructure. However, the problem is particularly acute for deep learning due to the sheer size of modern models, which can be in the tens of GB. We commonly hear from customers that their cold starts are taking 5+ minutes. With OctoAI infrastructure, we can typically reduce this overhead to less than a minute.

Consider a basic approach to starting up a node to host ML infrastructure:

Acquire an EC2 node with an appropriate GPU

Download and start the model container

Download model weights and other assets (like LoRAs)

Each of these steps can by itself require minutes. As such, we need to attack all of these sources of overhead to achieve sub-one-minute cold starts.

Warm pooling

Acquiring and booting a virtual machine is a slow operation. We typically observe 2-3 minutes of latency in AWS for this operation. As such, it is imperative to have some amount of warm capacity on hand. We maintain a warm pool of nodes across our various supported instance types. A key advantage of OctoAI is that our warm pool is shared across multiple tenants. This allows us to amortize the cost of the warm pool while still providing burst allocations in the vast majority of cases. Of course, OctoAI customers don't need to think about the warm pool - this is a feature we provide automatically.
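As a rough illustration of a shared warm pool, the sketch below hands pre-booted nodes to whichever tenant asks first and falls back to a slow boot when the pool is empty. `WarmPool`, `boot_node`, and the instance type labels are hypothetical; the post does not describe how the real pool is sized or replenished.

```python
from collections import defaultdict, deque

class WarmPool:
    """Pre-booted nodes per instance type, shared by all tenants."""

    def __init__(self, boot_node):
        # boot_node(instance_type) -> node id; stands in for the slow VM boot path.
        self._boot_node = boot_node
        self._idle = defaultdict(deque)
        self.assignments = {}  # node id -> tenant currently using it

    def replenish(self, instance_type: str, count: int) -> None:
        """Boot nodes ahead of demand so later requests skip the VM boot."""
        for _ in range(count):
            self._idle[instance_type].append(self._boot_node(instance_type))

    def acquire(self, tenant: str, instance_type: str):
        """Return (node id, 'warm' or 'cold') for the requesting tenant."""
        idle = self._idle[instance_type]
        if idle:
            node, source = idle.popleft(), "warm"
        else:
            node, source = self._boot_node(instance_type), "cold"
        self.assignments[node] = tenant
        return node, source
```

Because the idle nodes are not reserved per tenant, one pool absorbs bursts from many customers, which is how the cost gets amortized.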

Optimized download of model weights

Model weights are typically the single largest contributor to model size. Unlike code assets (PyTorch, etc.), model weights are specific to a model and therefore difficult to prefetch. Furthermore, model weights are often fetched on startup (from Hugging Face or a private repo). To optimize weight fetching, we rely on an abstraction called a volume, which is a persistent storage device whose lifetime is decoupled from the replica. Volumes are used in two phases. During container building, the user prepares the volume by copying weights and other large assets into it. At runtime, the volume is mounted into a user-specified directory. Volumes are ultimately backed by an object store (S3 in our case). During replica startup, we transparently fetch the volume’s content from storage in an optimized way, allowing us to achieve near-wire speeds of 1 GB/s or much higher on modern hardware.

We initially envisioned volumes as a mitigation for model weights. However, volumes have proven applicable to other types of large assets. For example, NVIDIA TensorRT requires a compilation phase that is expensive for large models. When running such models, we cache the output of TensorRT compilation in a volume during model build. At runtime, we redirect TensorRT to consume the pre-optimized model binary from within the volume directory.
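The post does not say how the fetch is optimized. One common technique for approaching wire speed against an object store is to issue many ranged reads in parallel, sketched below. This is an assumption about the general approach, not a description of OctoAI's volumes; `read_range` is a hypothetical callable standing in for a ranged GET.

```python
from concurrent.futures import ThreadPoolExecutor

def fetch_parallel(read_range, total_size: int,
                   chunk_size: int = 8 * 1024 * 1024, max_workers: int = 16) -> bytes:
    """Fetch an object as fixed-size chunks in parallel and reassemble it in order.

    read_range(offset, length) -> bytes is supplied by the caller.
    """
    def fetch(offset: int) -> bytes:
        length = min(chunk_size, total_size - offset)
        return read_range(offset, length)

    offsets = range(0, total_size, chunk_size)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map yields results in input order, so chunks join correctly.
        return b"".join(pool.map(fetch, offsets))

# Usage against an in-memory stand-in for the object store.
blob = bytes(range(256)) * 100
copy = fetch_parallel(lambda off, n: blob[off:off + n], len(blob), chunk_size=1000)
```

A production version would stream chunks to disk instead of holding the whole object in memory, since weights can run to tens of GB.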

Prefetching of code assets

Once we've optimized for weight download, we still need to worry about code dependencies such as PyTorch. Although typically smaller than the model weights, code dependencies are slow to download and unpack due to inefficiencies in the Docker protocol (e.g., serial downloads per layer). As a rule of thumb, we commonly observe container image fetches running 10x slower than an optimized download from raw S3.

One advantage we have for code assets is that there is a large amount of reuse. Many folks these days build on PyTorch and a small number of popular helper libraries (HF transformers, etc.). To optimize for reuse, we maintain a set of common Docker images with associated "layers" that encode ML dependencies. Our warm pool nodes prefetch these base layers in anticipation of future use. We cannot prefetch the custom part of the user's model. However, this "bespoke" part is typically a small fraction of the overall bytes (10% or less in our experience). Therefore, image prefetching addresses the bulk of the download cost for model code assets.

One challenge with caching Docker layers is that the caching behavior is order dependent. For example, suppose the cached image installs library A and then library B. A separate container image uses the same dependencies but reverses the installation order. These two images cannot share cache layers, even though the final result on disk may be exactly the same. Our documentation and SDK tooling guide folks towards Docker base images that work well with our caching strategy. In the future, we may explore other deduplication approaches (block-level or chunk-level) that remove ordering dependencies as a concern.
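The ordering problem follows from how layers are identified: each layer's identity depends on everything beneath it. The sketch below is a simplified model of that chaining, not Docker's actual cache-key computation, and the image instructions are made-up examples.

```python
import hashlib

def layer_keys(instructions):
    """Chain-hash build steps: each key covers the step and every step before it."""
    keys = []
    parent = ""
    for instruction in instructions:
        parent = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()
        keys.append(parent)
    return keys

def shared_layers(image_a, image_b) -> int:
    """Count the leading layers that two images can share from a cache."""
    count = 0
    for key_a, key_b in zip(layer_keys(image_a), layer_keys(image_b)):
        if key_a != key_b:
            break
        count += 1
    return count

cached = ["FROM base", "RUN install A", "RUN install B"]
reordered = ["FROM base", "RUN install B", "RUN install A"]
reusable = shared_layers(cached, reordered)
```

Here only the base layer is reusable: once the second step differs, every later key differs too, even though both images end up with A and B installed. Content-addressed deduplication at the block or chunk level avoids this because identity depends on the bytes rather than on the build history.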

OctoAI GA coming soon

OctoAI’s current architecture routes requests intelligently to fully utilize the entire worker fleet, auto-scales workers efficiently and only when needed, and gets new workers ready quickly by minimizing their cold start. OctoAI is ready for large-scale production traffic, and we are moving to General Availability at the end of October.
