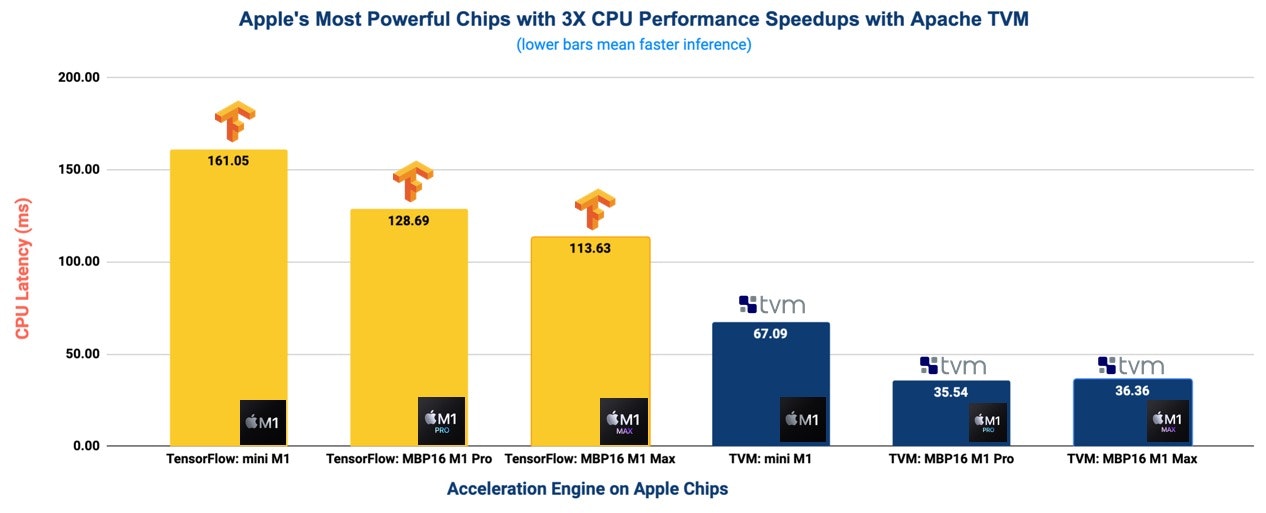

OctoML, the company behind the leading Machine Learning (ML) Deployment Platform, has recently spearheaded ML acceleration work with Hugging Face’s implementation of BERT to automatically optimize its performance for the “most powerful chips Apple has ever built.” OctoML is able to provide an average 3X speedup over Apple’s TensorFlow plugin by leveraging its expertise in Apache TVM, the open source stack for ML performance and portability. The details of these technical achievements will be presented at TVMCon, the open source ML acceleration conference on December 17th.

OctoML’s speedups are a breakthrough in how state-of-the-art AI applications can be deployed on consumer devices like MacBooks and Mac minis. For example, BERT (a natural language processing model) can deliver low-latency inference to consumers without taxing the CPU and battery life needed for other tasks. Consumers can get this performance without purchasing Apple’s high-end devices; that is, they won’t need to buy 32-core GPU machines to fully experience mainstream AI applications. Additionally, OctoML’s model optimizations enable CPU-only execution, which frees up the MacBook and Mac mini GPUs for video editing, gaming and other graphics needs.

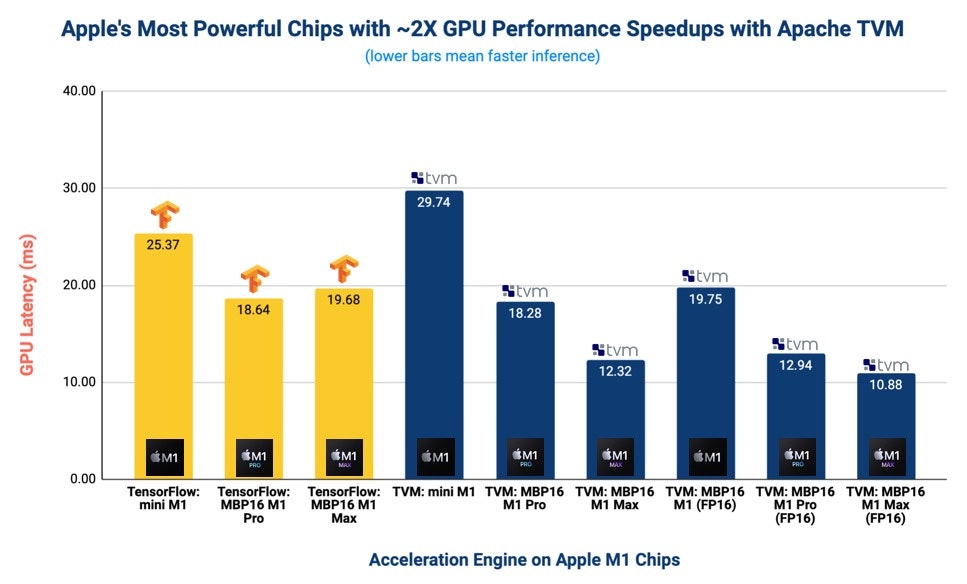

Developers, benefiting from inference latencies under 40 milliseconds, can now be confident that they can create new AI applications with state-of-the-art performance on consumer devices. Today we’re enabling Apple laptops, but in the future all Apple silicon powered devices will be supported. Developers can also count on both Apple and OctoML continuing to deliver performance advancements over time. Already, the combination of these two companies innovating has resulted in a 2X speedup of the BERT model in less than a year (see OctoML’s previous work here).

OctoML’s efforts with Apache TVM are also relevant to other silicon makers and hardware providers seeking the same outcomes for their platforms. To put these M1 efforts in context: only a few weeks after the original M1 was released, OctoML, without advance access to the new Apple hardware, was able to get Apache TVM running on M1 silicon and providing higher performance than Apple through its own acceleration techniques. A year later, the advancements in Apache TVM’s code generation, auto-tuning, and Metal integration mean that the same code from our last M1 evaluation provides state-of-the-art performance on the two newest versions of Apple silicon: the Apple M1 Pro and M1 Max.

OctoML, with its focus on choice, automation and performance, ensures that these speedups happen across the board: on CPU architectures such as Arm, AMD and Intel, as well as GPU architectures like NVIDIA and Apple.

In addition to providing performance for high-end applications on CPUs, OctoML’s speedup efforts improve performance on GPU, where ML inference runs in 10.88 milliseconds on a 16” MacBook Pro with an M1 Max processor using quantization (to fp16) with just two lines of additional code. The same technique allowed the older M1 chip on a Mac mini to run inference on this model in 19.75 milliseconds. Apache TVM provides an approximately 2X acceleration over Apple-optimized TensorFlow, which executes in 19.68 milliseconds on the same hardware. Overall, the Apache TVM speedups are between 5X and 10X over Apple’s CoreML, where inference latencies are a little above 100 milliseconds on all M1 chips.
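To make the arithmetic behind these figures easy to check, here is a minimal Python sketch that turns the quoted latencies into speedup ratios. Two things in it are assumptions rather than statements from the benchmark: the CoreML latency is rounded to exactly 100 milliseconds (the post only says "a little above 100"), and the TensorFlow figure is paired with the M1 Max result. The variable and function names are illustrative only.

```python
# Latencies in milliseconds, taken from the figures quoted in this post.
TVM_M1_MAX_MS = 10.88      # TVM, fp16, GPU, 16" MacBook Pro (M1 Max)
TVM_M1_MINI_MS = 19.75     # TVM, fp16, GPU, Mac mini (M1)
TENSORFLOW_MS = 19.68      # Apple-optimized TensorFlow (assumed pairing: M1 Max)
COREML_MS = 100.0          # assumption: "a little above 100 ms", rounded to 100


def speedup(baseline_ms: float, optimized_ms: float) -> float:
    """Return how many times faster the optimized run is than the baseline."""
    if optimized_ms <= 0:
        raise ValueError("latency must be positive")
    return baseline_ms / optimized_ms


# Ratios implied by the quoted latencies, rounded to two decimals.
tvm_vs_tensorflow = round(speedup(TENSORFLOW_MS, TVM_M1_MAX_MS), 2)
tvm_vs_coreml_max = round(speedup(COREML_MS, TVM_M1_MAX_MS), 2)
tvm_vs_coreml_mini = round(speedup(COREML_MS, TVM_M1_MINI_MS), 2)

print(f"TVM vs TensorFlow (M1 Max): {tvm_vs_tensorflow}x")
print(f"TVM vs CoreML (M1 Max):     {tvm_vs_coreml_max}x")
print(f"TVM vs CoreML (M1 mini):    {tvm_vs_coreml_mini}x")
```

Under these assumptions the ratios come out at roughly 1.8X over TensorFlow and between about 5X and 9X over CoreML, in line with the approximate figures given above.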

Want to learn more and earn a chance to win a new 14” M1 MacBook Pro? Register for and attend TVMCon, the free open source conference for ML acceleration, which takes place December 15-17.

Here's the public repo where we've open-sourced our benchmarking process — check it out, share your thoughts, and join the TVM community if you are interested in enabling access to high-performance machine learning anywhere for everyone.
