We’re excited to announce the launch of OctoAI Text Gen Solution, the fastest and easiest way to consume open source LLMs from your applications. Starting today, you can build on your choice of Llama 2 Chat, Code Llama Instruct and Mistral Instruct models, across 7B, 13B, 34B and 70B model sizes, all powered by OctoAI’s best-in-class compute infrastructure. OctoAI Text Gen Solution offers industry-leading token prices and speeds, and when it comes to accuracy, open source software (OSS) LLMs are now giving closed-source LLMs a run for their money!

With the OctoAI Text Gen Solution, you can:

Run inferences against multiple sizes and variants of Llama 2 Chat, Code Llama Instruct and Mistral Instruct models, all through one unified API endpoint

Benefit from the best prices and the lowest latency available for OSS LLMs today

Launch and scale your application on the proven reliability of the OctoAI platform, which already processes millions of customer inferences every day

The LLM landscape is changing almost every day, and we need the flexibility to quickly select and test the latest options. OctoAI made it easy for us to evaluate a number of fine tuned model variants for our needs, identify the best one, and move it to production for our application.

Matt Shumer, CEO @ Otherside AI

Product walkthrough: OctoAI Text Gen Solution

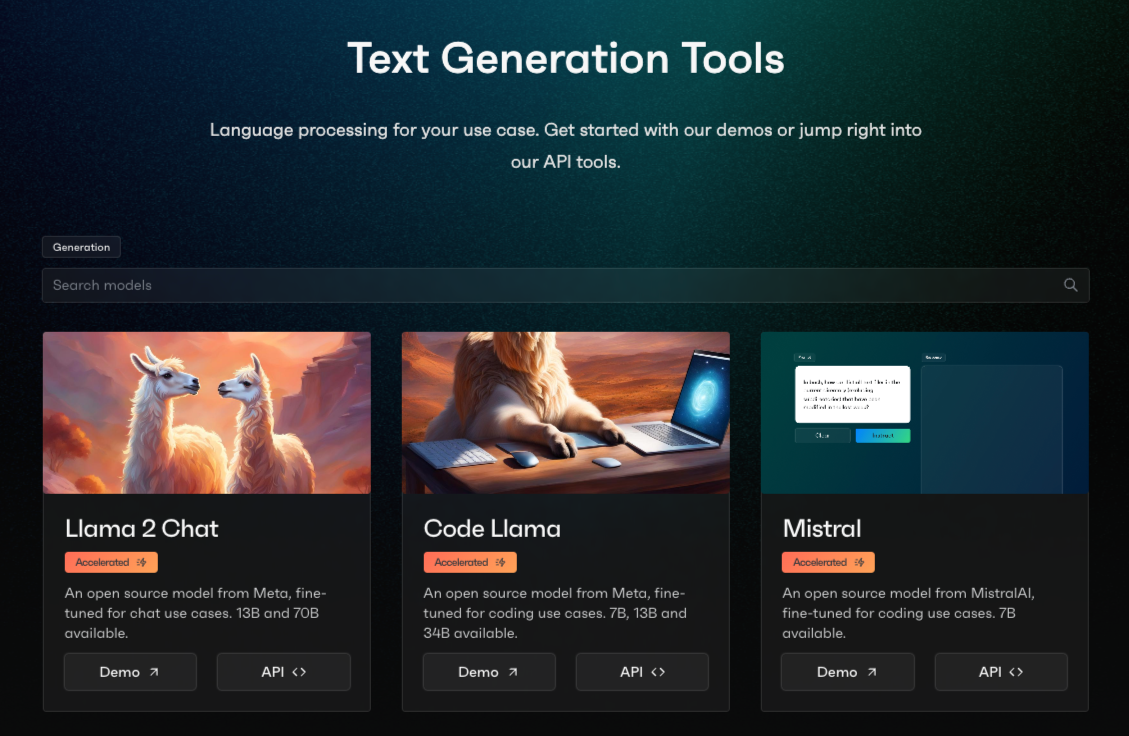

To get started, head over to the OctoAI Text Gen Solution homepage, where you can pick from different open source LLMs to run. Sign up for an account if you don't already have one, and get $10 of free credit.

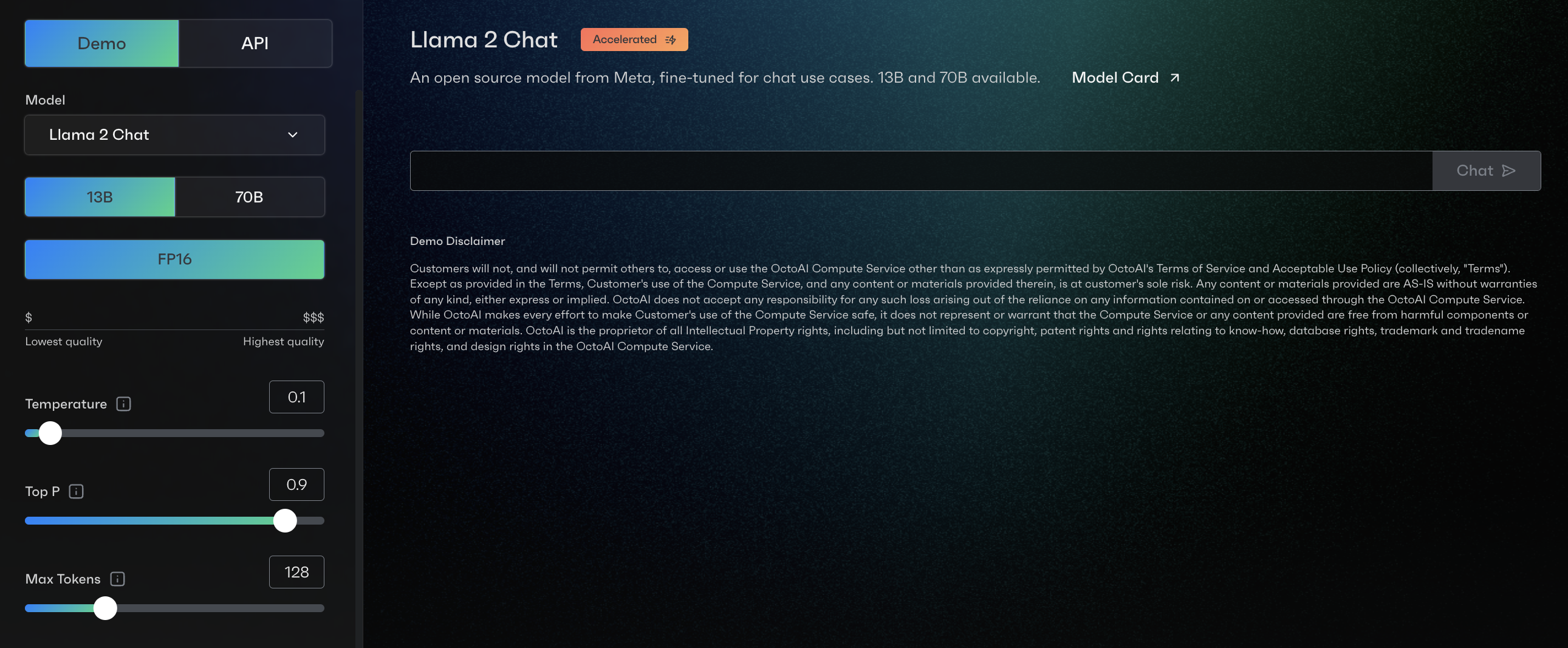

We'll pick Llama 2 Chat. Each model has an interactive demo you can play with to tweak things, and an API page for working with OctoAI endpoints.

Using the pane on the side, you can customize your model by choosing a size, a quantization method, and a few parameters like Temperature and Max Tokens. With each change, you’ll see prices and max token length update in real time, so you can make quick and educated configuration decisions. For testing, the model screen has a simple chat box where you can prompt the model for quick responses. After each response, OctoAI displays statistics about the request, such as tokens per second and time to first token.
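
If you want to compare these numbers against your own measurements, the two statistics can be approximated from client-side timestamps. The sketch below is only an illustration: the function name is hypothetical, and it assumes tokens per second is measured over the whole request, including the wait for the first token, which may differ from how OctoAI computes the figures shown in the UI.

```python
def generation_stats(request_start, token_times):
    """Return (time_to_first_token, tokens_per_second).

    request_start: time the request was sent, in seconds.
    token_times:   arrival time of each generated token, in seconds.
    Assumption: throughput is measured over the full request duration.
    """
    if not token_times:
        raise ValueError("no tokens were received")
    time_to_first_token = token_times[0] - request_start
    total_time = token_times[-1] - request_start
    # Guard against a zero-length interval (e.g. all tokens in one chunk).
    tokens_per_second = (
        len(token_times) / total_time if total_time > 0 else float("inf")
    )
    return time_to_first_token, tokens_per_second
```

For example, four tokens arriving at 0.5, 1.0, 1.5 and 2.0 seconds after the request give a time to first token of 0.5 seconds and a rate of 2 tokens per second.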

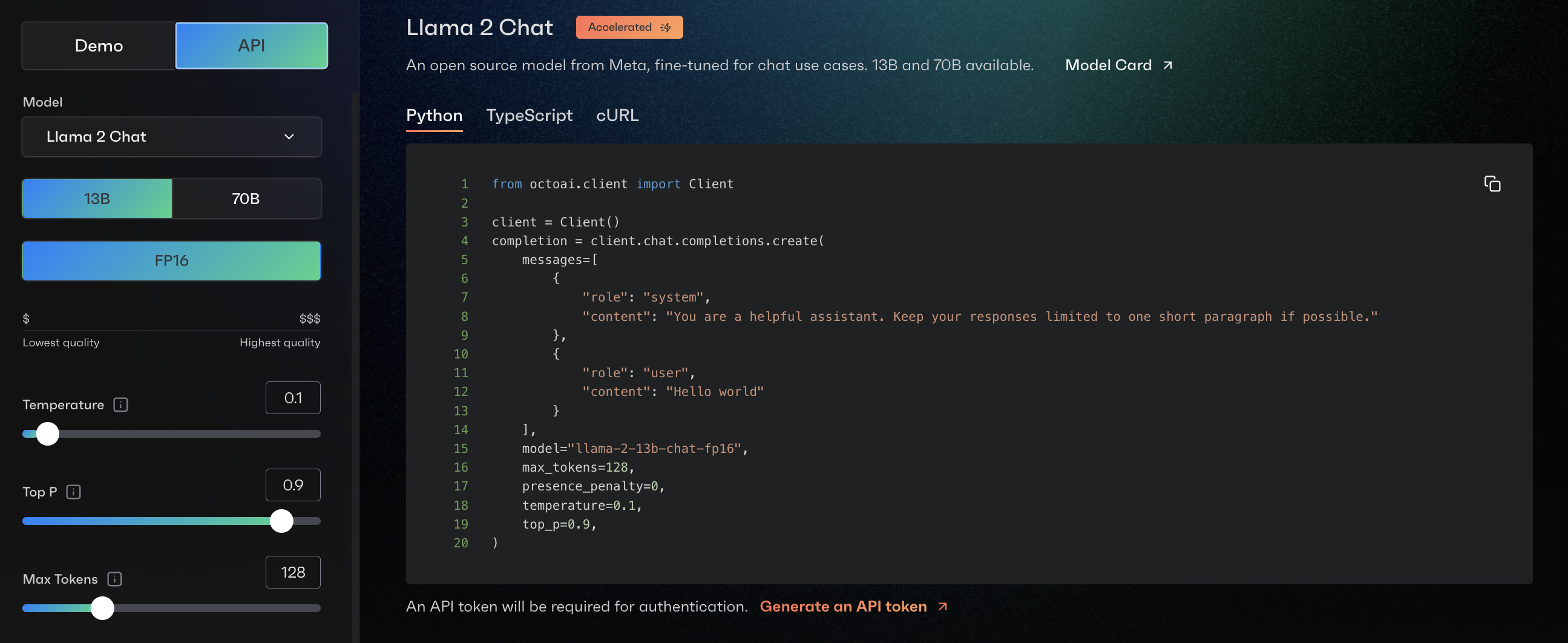

The API screen describes how to use our endpoints in your application. You can get started quickly by copying and pasting the API requests, available in the UI in Python, TypeScript, or cURL. Using the OctoAI Text Gen Solution from your existing Python-based LLM application can be as simple as changing just three lines of code, as shown in this demo video. When you need to scale your application, all you have to do is continue sending inferences to the same API endpoint. OctoAI handles the infrastructure scaling needed to support your on-demand changes in usage, with no upfront pre-provisioning or service degradation.
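
As a rough illustration of what such a request might look like, here is a minimal Python sketch that builds (but does not send) a chat-style POST request using only the standard library. Everything specific in it is an assumption made for illustration: the endpoint URL, the model name, the `OCTOAI_TOKEN` environment variable, and the chat-style payload shape are placeholders, so copy the real values from the API page in the UI.

```python
import json
import os
import urllib.request

# Hypothetical placeholders: replace with the values shown on the API page.
ENDPOINT_URL = "https://example.invalid/v1/chat/completions"
MODEL_NAME = "llama-2-13b-chat"


def build_chat_request(prompt, temperature=0.1, max_tokens=256, token=None):
    """Build a POST request for a chat-style text generation endpoint.

    The payload shape (model, messages, temperature, max_tokens) is an
    assumed chat format, not a confirmed description of the OctoAI API.
    """
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    # OCTOAI_TOKEN is a hypothetical environment variable name.
    token = token or os.environ.get("OCTOAI_TOKEN", "")
    return urllib.request.Request(
        ENDPOINT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send the request. In a sketch like this, switching providers comes down to the endpoint URL, the model name, and the token, which is one plausible reading of the three-line change mentioned above.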

Building on the scalability, efficiency and reliability of the OctoAI platform

The OctoAI Text Gen Solution builds on the efficient OctoAI compute service and our model acceleration capabilities. This includes infrastructure improvements like distributed inference across multiple GPUs, and serving layer improvements including paged attention, flash attention, and multi-query attention. Initial testing with the full FP16 precision Code Llama 34B Instruct model showed token output rates as high as 169 tokens per second. We will be publishing more detailed test results across models and sizes in the coming days.

We will also be adding more optimizations in the coming weeks, including query batching and the use of the powerful (and hard to get!) NVIDIA H100 GPUs, which we have secured through our cloud provider relationships. Through these, OctoAI continues to provide builders with the broadest range of configuration and optimization options, all while preserving interoperability with developments in the broader OSS community.

Get started with OctoAI Text Gen Solution today

If you are currently using a closed source LLM like GPT-3.5-Turbo or GPT-4 from OpenAI, we have a new promotion to help you accelerate integration of open source LLMs in your applications. Read more about this in the OctoAI Model Remix Program introduction.